In May 2025, VentureBeat reported that productivity demands at top consultancies had already driven employees to create over 70,000 shadow AI applications. While “shadow AI” often includes the unsanctioned use of commercial AI offerings, in this case the study considered only a more problematic subset of applications: “These projections exclude one-off use of ChatGPT or Gemini in browser sessions. They reflect persistent apps and workflows built using APIs, scripting, or automated agents developed inside consulting teams.” Based on their interviews with experts at eleven consultancies, VentureBeat predicted there would be 100,000 such apps within the year.

The risk of such an unmanaged attack surface expansion would be enormous—100,000 applications developed by subject matter amateurs, free from security oversight, processing client data for major consultancies. Surely such an increase in risky behavior would result in some data security consequences. So where are they?

The Streamlit framework, built to transform small collections of scripts into hosted web applications, has exposed the tip of this iceberg. In analyzing publicly accessible Streamlit apps, UpGuard has found thousands of cases of data leakage through shadow AI: lightweight AI projects, accessible without authentication, exposing confidential business data and personally identifiable information to anyone on the internet.

Making Data Apps with Streamlit

Streamlit is an open source framework for easily creating web applications out of scripts. In Streamlit's own words: “Turn your data scripts into shareable web apps in minutes. All in pure Python. No front‑end experience required.” As someone who also has lots of Python scripts and no front-end experience, that sounds great!

By facilitating the metamorphosis from Python script to web application, Streamlit makes it easier to move those scripts from one’s laptop, where they are not externally accessible, to a hosted environment, where they can be accessed over the internet.

Streamlit offers two options for hosting: either a user can provide their own, or they can host their app for free in Streamlit’s Community Cloud. The free hosting comes with the caveat that all apps in the Community Cloud are public. The user is responsible for understanding that caveat and declining the free option if their app has confidential data. Self-hosting, on the other hand, requires that the user correctly configure their application and network settings to secure their data apps.

Tens of Thousands of Exposed Applications

Using internet-scanning software in October 2025, we identified 14,995 unique IP addresses running applications created with Streamlit. (Scanning engines like Shodan and Censys return more results when Streamlit apps are running on multiple ports of the same IP.) Of those, 3,176 (21%) had the Streamlit login page enabled, preventing us from viewing any further contents of the application. Another 505 returned status codes that blocked access (404, 502, 504) or application messages indicating some malfunction ('AttributeError', 'ModuleNotFound', 'Access denied'). After accounting for those with authentication enabled or other errors, there were still well over ten thousand Streamlit applications granting access to the public.
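The triage described above can be sketched in a few lines of Python. This is an illustration, not the tooling we used: the status codes and error strings come from the paragraph above, while the `LOGIN_MARKERS` values and the function names are assumptions made for the example, and the input is assumed to be the rendered page text.

```python
from collections import Counter

# Status codes and message fragments taken from the paragraph above.
BLOCKING_STATUS = {404, 502, 504}
ERROR_MARKERS = ("AttributeError", "ModuleNotFound", "Access denied")
# Assumption: the real login page may need a different marker.
LOGIN_MARKERS = ("Log in", "Login")


def classify(status: int, body: str) -> str:
    """Return 'error', 'login', or 'open' for one scanned response."""
    if status in BLOCKING_STATUS or any(m in body for m in ERROR_MARKERS):
        return "error"
    if any(m in body for m in LOGIN_MARKERS):
        return "login"
    return "open"


def tally(responses):
    """Count categories over an iterable of (status, body) pairs."""
    return Counter(classify(status, body) for status, body in responses)
```

Calling `tally` on the full set of scan results would give the three buckets reported above: login page enabled, errors, and openly accessible.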

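This number seems to bear out the main thesis of the VentureBeat investigation. If the ten thousand or so open apps we found account for 10% of all such apps (a very, very generous market share to assume for Streamlit), then the total is at least ten times larger, and 100,000 shadow AI apps looks like a conservative estimate.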
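The back-of-the-envelope arithmetic can be written out explicitly. The counts below are the ones reported above; the 10% market share is the same assumption used in the text, not a measured value, and `extrapolate_total` is a name invented for this sketch.

```python
def extrapolate_total(observed_apps: int, market_share: float) -> float:
    """Scale an observed count up to a whole-market estimate."""
    if not 0 < market_share <= 1:
        raise ValueError("market_share must be in (0, 1]")
    return observed_apps / market_share


scanned, login_enabled, errors = 14_995, 3_176, 505
open_apps = scanned - login_enabled - errors

# Assumed share: if Streamlit's real share is smaller, the estimate grows.
estimate = extrapolate_total(open_apps, 0.10)
print(open_apps, round(estimate))
```

A smaller assumed share pushes the estimate higher, which is why a generous 10% yields a conservative total.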

In addition to the ten thousand self-hosted apps currently online, there are several times more in the Streamlit Community Cloud. Apps hosted by Streamlit are assigned a unique subdomain of *.streamlit.app. A Google search restricted to that domain returned 220k results. For a more accurate measurement, we queried our passive DNS data lake for subdomains that have been active in the last 180 days, and there we found 50k domains. Extending that time horizon further into the past (which could also include apps that have since been removed) shows even more.

Impacts of Exposed Shadow AI Apps

While there are far more public apps in Community Cloud, we focused this research on only the self-hosted instances. In our initial sampling, the self-hosted instances were more likely to have sensitive data, and they would be sufficient to demonstrate the importance of proper access controls. To collect the data, we wrote a script (yes, in Python) to navigate to each IP address, save the page HTML, and take a screenshot. These data capture methods allowed us to search the text for keywords and manually inspect the screen captures for indicators of sensitive data. With ten thousand pages to get through, we were going to have to be efficient.
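A rough sketch of the collection loop follows, using only the standard library. It is not our actual script: `fetch_html`, `capture_all`, and the file naming scheme are hypothetical. It also leaves out the screenshot step, and because a plain HTTP fetch returns only the initial HTML of a JavaScript-rendered app, a headless browser would likely be needed to capture the rendered text and screenshots.

```python
from pathlib import Path
from urllib.request import urlopen


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page body; raises OSError on network or HTTP failure."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def capture_all(targets, out_dir, fetch=fetch_html):
    """Save one HTML file per 'ip:port' target.

    Returns {target: saved Path, or None if the fetch failed}.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    results = {}
    for target in targets:
        try:
            html = fetch(f"http://{target}")
        except (OSError, ValueError):
            results[target] = None  # unreachable or malformed target
            continue
        path = out / (target.replace(":", "_") + ".html")
        path.write_text(html, encoding="utf-8")
        results[target] = path
    return results
```

Passing the fetcher in as a parameter keeps the loop easy to exercise offline and makes it simple to swap in a browser-based fetcher later.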

Personally Identifiable Information

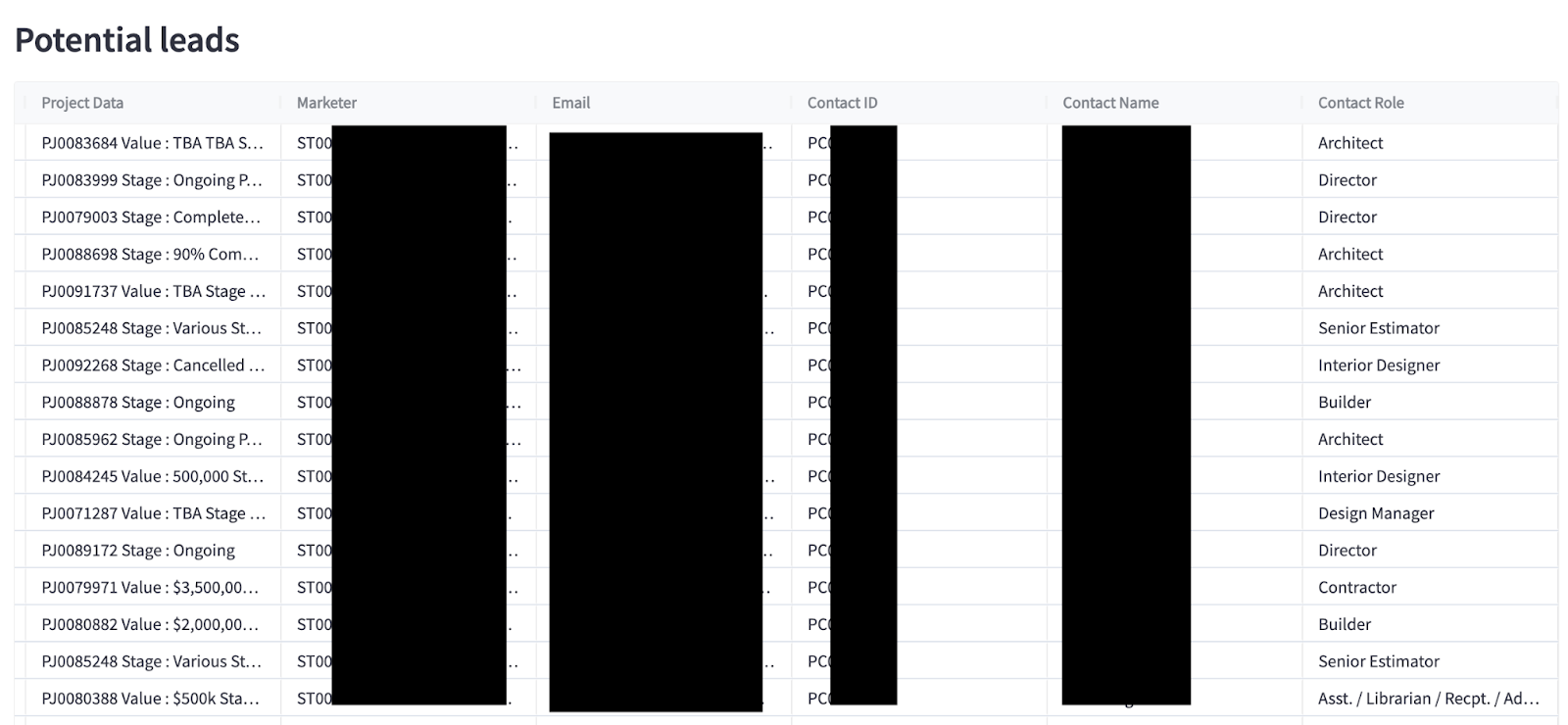

Visually inspecting the applications, by flipping through the folder of ten thousand screengrabs, led us to discover some instances used for sales lead tracking, making contact information for thousands of people available to the internet. For example, one CRM used by an Australian architectural supplier included contact information for people at 617 unique companies and the budget and status of their projects. (UpGuard reported this leak to the company and the Australian Cyber Security Centre; it has since been secured.)

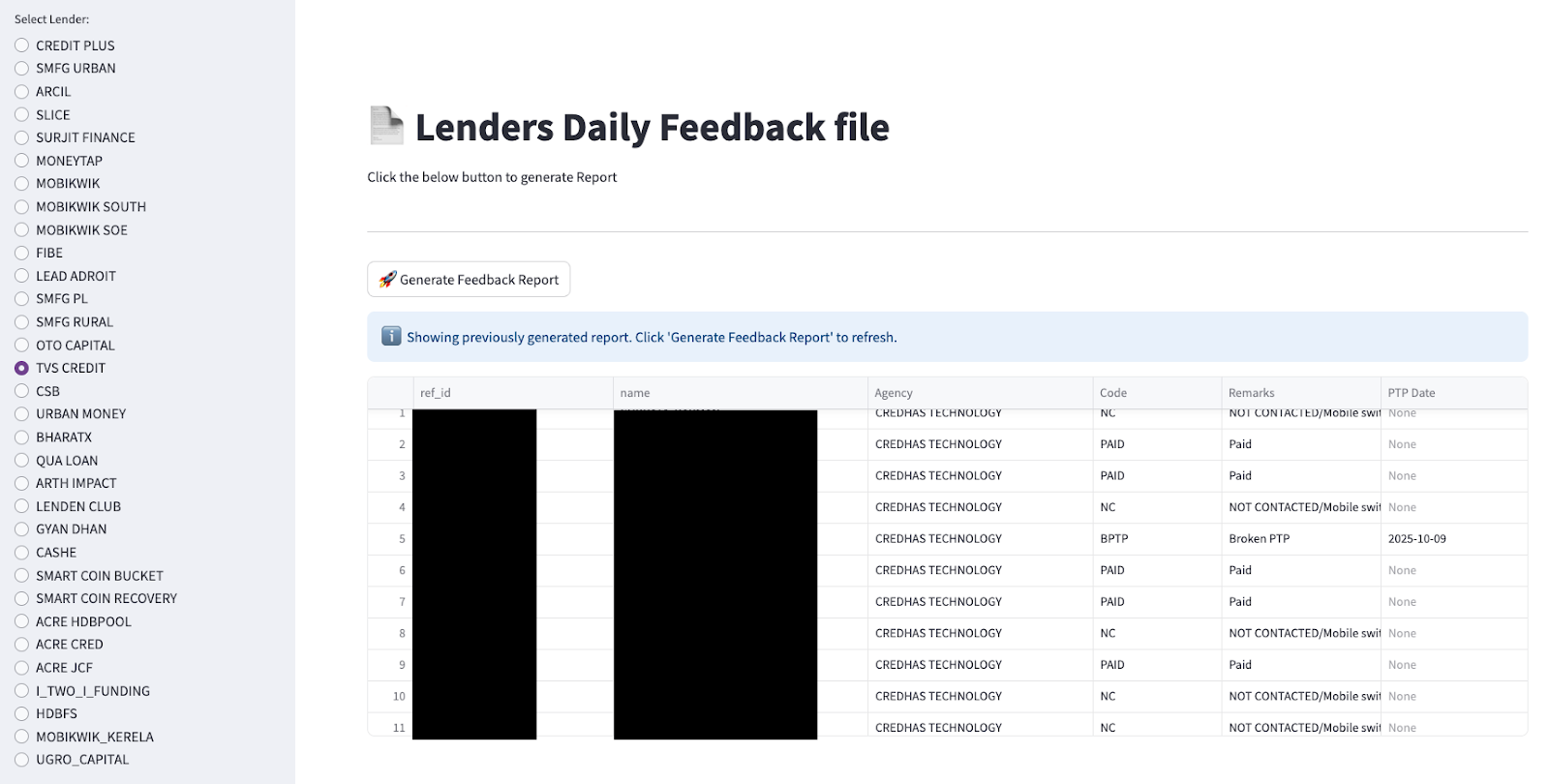

In another case, an Indian digital collections company exposed the interface for reporting on activity across lenders’ cases, including the names of the individuals they were pursuing for payment.

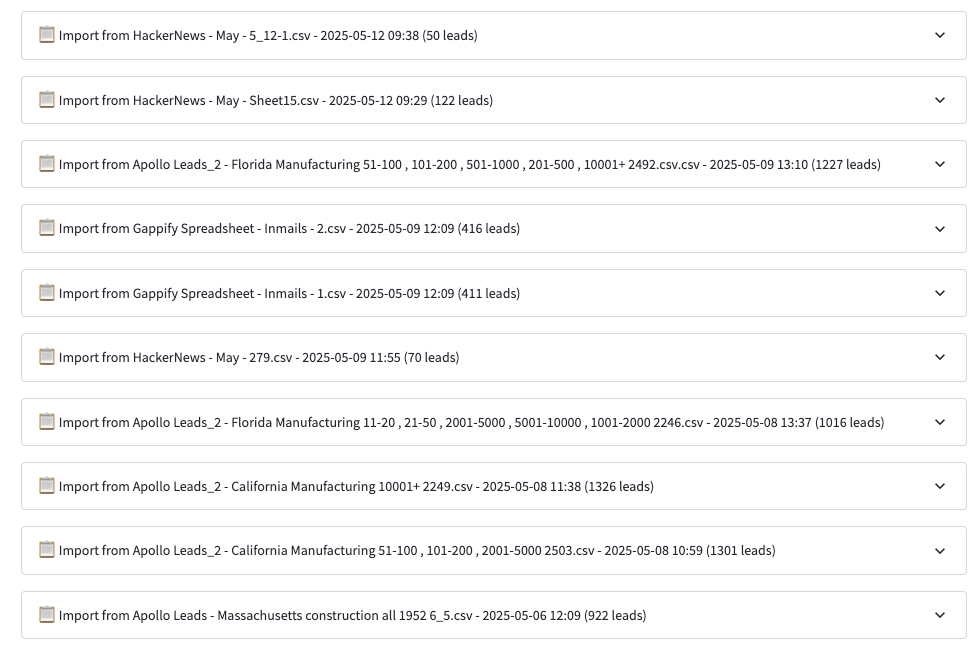

Turning to our collection of saved HTML, we searched for "@gmail.com" to identify pages with some kind of email address. That gave us 95 apps with some contact information; while some of those were personal portfolios, they also included even more lead lists for sales and talent acquisition departments.

Confidential Business Data

While the legal protections for PII make it an obviously sensitive form of data, the more natural fit for Python apps is business intelligence. Searching the text of our scraped HTML pages we found 2,140 pages that included “dashboard,” 284 with “revenue,” 278 with “pipeline,” and 963 with “customer.” Manually reviewing a sample of instances confirmed that real business intelligence data was accessible through these apps.
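The keyword pass can be approximated as below. The four keywords are the ones named above; the function names, the case-insensitive matching, and the assumption that pages were saved as `*.html` files in one directory are illustrative choices rather than a description of our tooling.

```python
from pathlib import Path

# Keywords named in the paragraph above.
KEYWORDS = ("dashboard", "revenue", "pipeline", "customer")


def load_pages(directory):
    """Read every saved *.html file into a {filename: text} mapping."""
    return {
        p.name: p.read_text(encoding="utf-8", errors="replace")
        for p in Path(directory).glob("*.html")
    }


def count_pages_with_keywords(pages, keywords=KEYWORDS):
    """Return {keyword: number of pages whose text contains it}.

    A page counts once per keyword, however often the word appears.
    """
    counts = {kw: 0 for kw in keywords}
    for text in pages.values():
        lowered = text.lower()
        for kw in keywords:
            if kw.lower() in lowered:
                counts[kw] += 1
    return counts
```

The same function covers the earlier email search by passing `("@gmail.com",)` as the keyword list. Substring matches like these only shortlist candidates; the manual review is what confirms whether the data is real.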

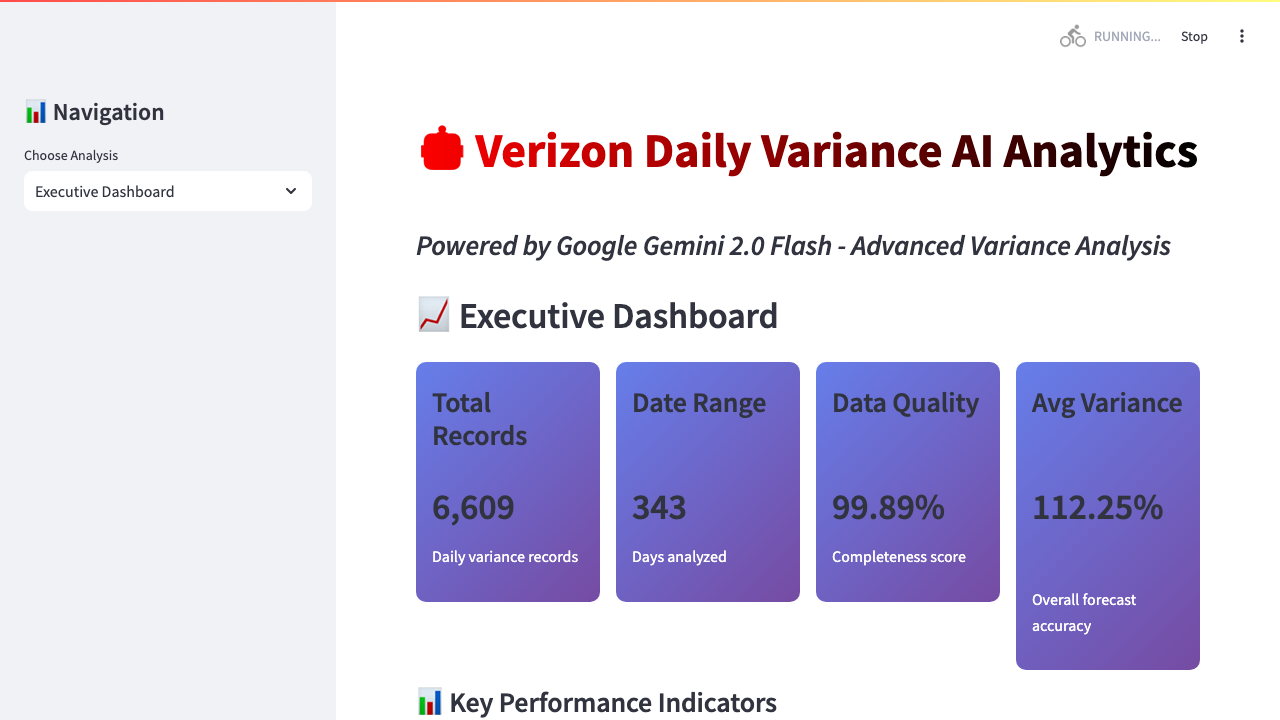

One instance had information about call performance across different Verizon brands running from 2024 to mid-2025. UpGuard notified Verizon and this data is no longer available.

Other dashboards tracked sales performance for various kinds of ecommerce businesses.

Dashboards also included product performance and user engagement metrics.

These data classes do not have the privacy protections of personal information, but revealing them to the world is not ideal. If the data is valuable enough to your business to build a BI dashboard, it’s probably damaging to give it to your competitors.

Recommendations

Streamlit is a powerful tool for making data apps more accessible. As AI makes writing the code for those apps even easier, tools to share it with humans become all the more important. However, as those apps become more valuable to your business and process real customer and performance data, securing them becomes more important too.

Avoiding data leaks due to insecurely configured Streamlit apps comes down to having an inventory of user-created applications, an inventory of the data assets available to those apps, and appropriate access controls relative to the data assets. Once you know what apps are processing data of some sensitivity, then you need to apply the proper controls.
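One way to make that inventory actionable is to record, for each app, what data it touches and how it is exposed, then flag the mismatches. The sketch below is illustrative only: the `App` fields and the two rules are assumptions drawn from the recommendations in this section, not a feature of any product.

```python
from dataclasses import dataclass


@dataclass
class App:
    name: str
    sensitive_data: bool   # does the app process sensitive data?
    public_hosting: bool   # e.g. hosted where every app is public
    requires_auth: bool    # is authentication enabled?


def find_violations(apps):
    """Return (app name, reason) pairs for apps that need attention."""
    findings = []
    for app in apps:
        if not app.sensitive_data:
            continue  # rules below apply only to sensitive apps
        if app.public_hosting:
            findings.append((app.name, "sensitive data on public hosting"))
        if not app.requires_auth:
            findings.append((app.name, "sensitive data without authentication"))
    return findings
```

An empty result does not prove an app is safe. It only means the inventory, as recorded, shows no obvious mismatch between data sensitivity and access controls.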

Apps with sensitive data should not be running in the public Community Cloud. They should be running on infrastructure that your security team can monitor. If they don't need to be accessible to outside parties, limit access to an internal network. Applications with data of any sensitivity should require authentication, but as a rule of thumb, you don't need to overthink this: just enable authentication. You won't regret it.

Managing applications as part of a security program is the ideal. In reality, you also want some kind of detective capability for shadow AI or exposures of your data by third parties. For this, products like UpGuard's User Risk and Threat Monitoring can help to detect unmanaged applications and find applications that might be exposing your data.

Conclusion

Amidst technology booms like the current market for AI, “shovels and picks” products like Streamlit can grow rapidly as they become the complement of choice for the massive number of people trying to build AI apps. Several kinds of risk attach to that growth: first, the expansion of the attack surface in absolute numbers; second, the possibility that this expansion happens in “shadow IT” unmanaged by a supervisory function; and third, the platform risk wherein design decisions made by a third party create a more active community at the expense of secure defaults for users.

Streamlit offers all three. Along with the benefit of easily creating web apps comes the risk of, well, easily creating web apps. The more your organization relies upon these apps to process valuable data, the greater the potential impact of exposure. The possibility of misconfiguration cannot be separated from the value of the product, and thus this issue is not a vulnerability to be fixed; it is a risk to be managed indefinitely.
