Downstream Data: Investigating AI Data Leaks in Flowise

Low-code workflow builders have flourished in the AI wave, providing the “shovels and picks” for non-technical users to make AI-powered apps. Flowise is one of those tools and, like others in its category, it has the potential to leak data when configured without user authentication. To understand the risk of misconfigured Flowise instances, we investigated over a hundred data exposures found in the wild.

About Flowise

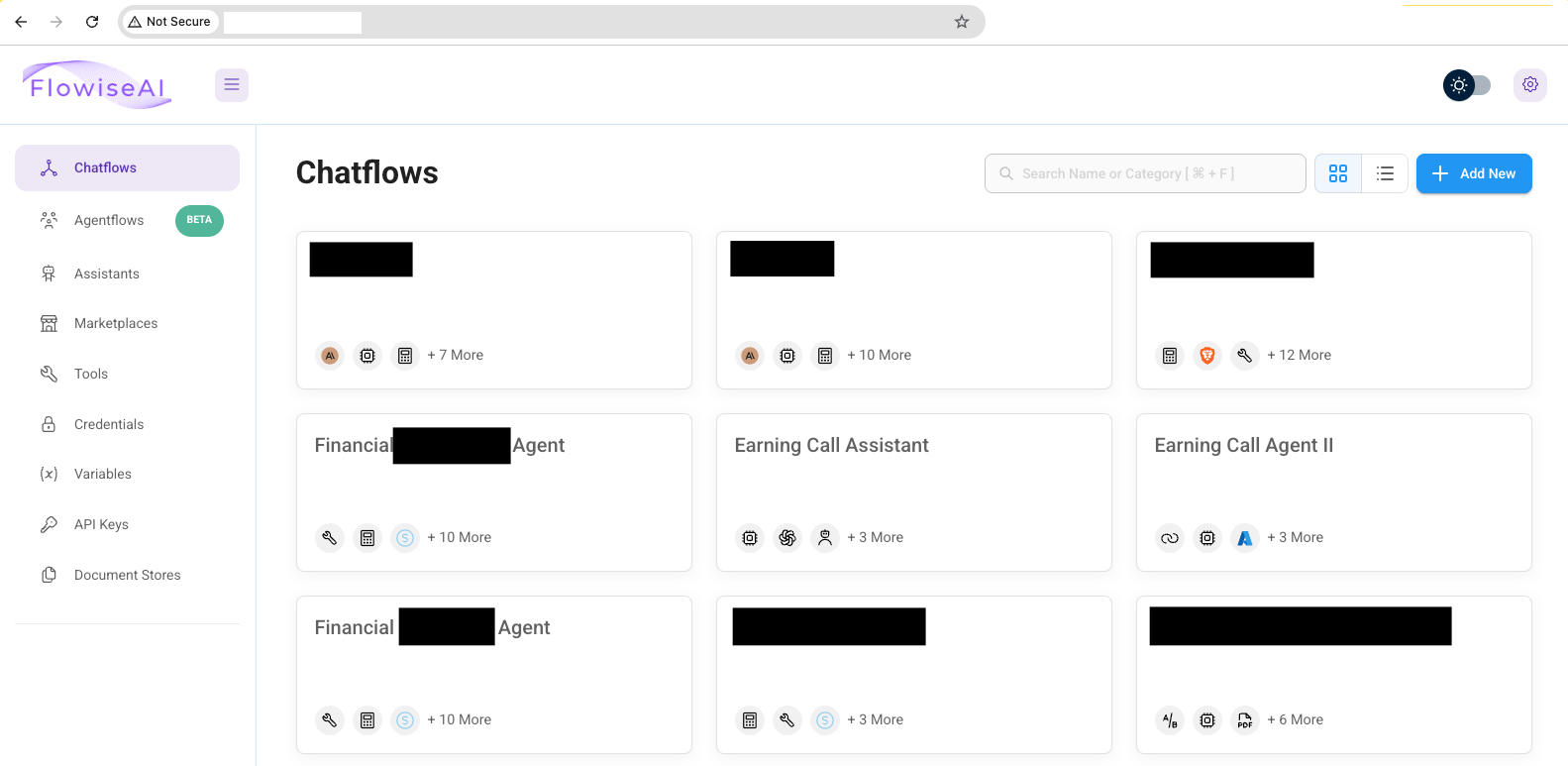

Flowise is a node-based workflow builder for AI apps, with a core “Chatflow” builder, vector document store, credential management, MCP tools, and other auxiliary capabilities. Like many supporting technologies for AI (including Langflow, Chroma, and LlamaIndex, which we covered in our series on AI data leaks), Flowise is both an open source project that can be self-deployed and a company with a commercial offering. While application security design decisions create the potential for misconfigurations, putting those configuration options in the hands of end users makes misconfigurations more likely.

None of the Flowise instances running on the app.flowiseai.com hostname were missing authentication; those are managed by the Flowise company’s central administrative team whose core competency is running Flowise. Self-hosted instances are the opposite, managed by a multitude of developers whose core competencies are whatever their businesses do. The former are much better at securely configuring Flowise than the latter.

Risks from exposed Chatflows

Unauthenticated access

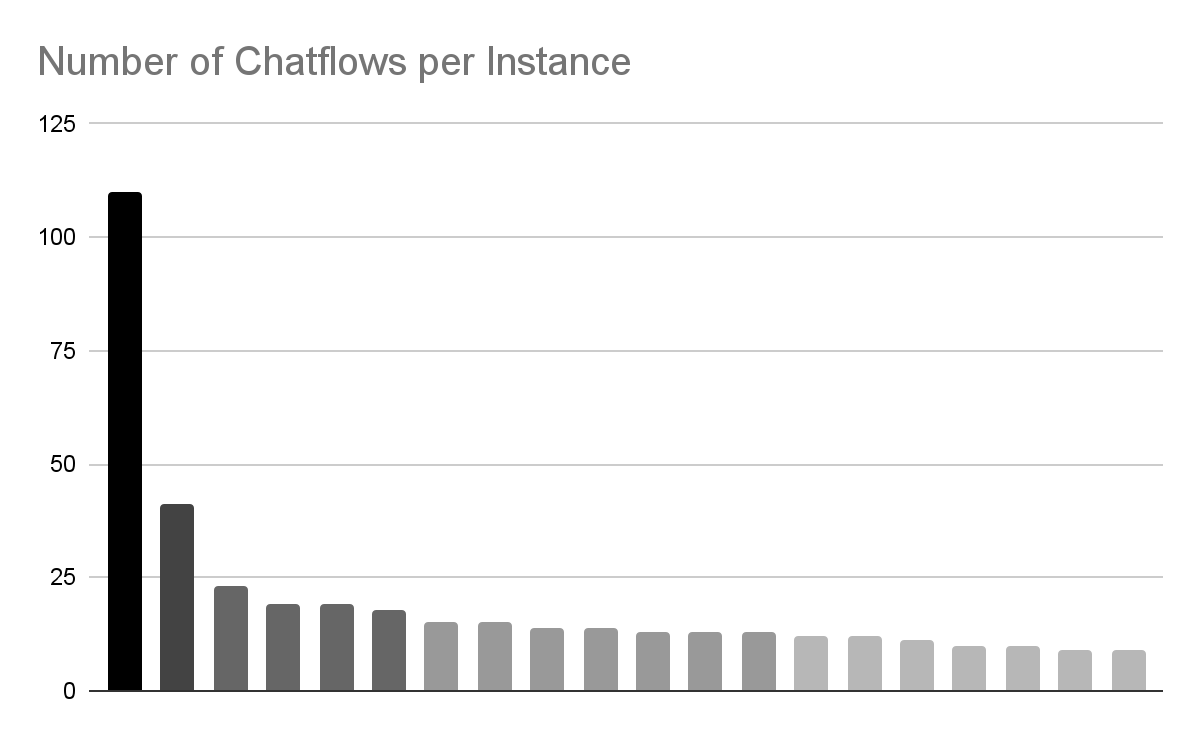

Flowise provides the configuration option for instances to be available to guest users. We tested around 1,000 internet-accessible Flowise instances; of those, 156 allowed unauthenticated access to browse the Flowise instance. Doing so allows one to read the contents of the Chatflows, the business logic of those AI applications.
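For operators who want to run the same kind of check against an instance they own, the sketch below sends one unauthenticated request and classifies the answer. It is not the tooling used in our survey. The `/api/v1/chatflows` path, the function names, and the three result labels are assumptions made for illustration; confirm the listing endpoint against the API documentation for your Flowise version.

```python
import json
import urllib.error
import urllib.request

# Assumed path for listing Chatflows; verify it for your Flowise version.
CHATFLOWS_PATH = "/api/v1/chatflows"


def classify_response(status: int, body: str) -> str:
    """Classify the reply to an unauthenticated Chatflow listing request.

    "protected": the server refused the anonymous request (401 or 403)
    "open":      the server returned a JSON list without credentials
    "unknown":   anything else (redirect page, error, non-JSON body)
    """
    if status in (401, 403):
        return "protected"
    if status == 200:
        try:
            parsed = json.loads(body)
        except json.JSONDecodeError:
            return "unknown"
        if isinstance(parsed, list):
            return "open"
    return "unknown"


def check_own_instance(base_url: str, timeout: float = 5.0) -> str:
    """Send one anonymous GET to an instance you operate and classify it."""
    url = base_url.rstrip("/") + CHATFLOWS_PATH
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", "replace")
            return classify_response(resp.status, body)
    except urllib.error.HTTPError as err:
        # urllib raises on 4xx/5xx; the status code is all we need here.
        return classify_response(err.code, "")
    except OSError:
        # Covers URLError, connection failures, and timeouts.
        return "unknown"
```

A result of `"open"` from `check_own_instance("https://flowise.example.internal")` (a placeholder URL) would mean an anonymous visitor can list that instance's Chatflows.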

Of those 156 open instances, 91 had two or more Chatflows, indicating some degree of use beyond the “hello world” step of setting up the system. Some instances had far more Chatflows, allowing us to identify the most promising candidates for further investigation.
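Expressed as rates, those counts work out as follows. The denominator of 1,000 is the rounded figure given above, so the first percentage is approximate.

```python
tested = 1000          # "around 1,000" instances tested (approximate)
open_instances = 156   # allowed unauthenticated browsing
multi_chatflow = 91    # open instances with two or more Chatflows

open_rate = open_instances / tested
multi_rate = multi_chatflow / open_instances

print(f"open without authentication: {open_rate:.1%}")   # about 15.6%
print(f"open with 2+ Chatflows:      {multi_rate:.1%}")  # about 58.3%
```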

Workflow modification

Unauthenticated users can not only read Chatflows, but also modify them (though of course we did not, as that would be unethical). Among the data points that can be modified as a guest user:

- LLM prompts. Users of LLMs know how sensitive these systems are to changes in prompting; even minor modifications could disrupt the functioning of applications using these workflows. Worse, malicious actors could modify the prompts to return toxic content or directions to attacker-controlled resources.

- Models used. While unlikely to result in catastrophic outcomes, changing the model will change the output. Using an untested model will generally provide worse results than the one the application is supposed to use.

- Credentials. Anonymous users could alter the credential sets being used in order to call attacker-controlled resources.

- Adding or deleting nodes. Most simply, an attacker can add a node to send data to an attacker-controlled resource. In traditional attacks, the malicious actors would exfiltrate data by injecting code into a website where it would intercept user inputs. When the backend workflow is accessible, they can accomplish the same logical outcome with low-code tools.
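Because every item in this list is a change to a stored Chatflow definition, one way an operator might notice tampering is to fingerprint each definition and compare it against a known-good baseline. The following is a minimal sketch of that idea, not a Flowise feature. It assumes each Chatflow can be exported as a JSON-compatible dictionary keyed by some identifier; the function names and data shapes are hypothetical.

```python
import hashlib
import json


def fingerprint(chatflow: dict) -> str:
    """Return a SHA-256 hex digest of a Chatflow's canonical JSON form.

    Sorting keys makes the digest independent of key order, so only real
    changes (prompt text, model name, added or removed nodes) alter it.
    """
    canonical = json.dumps(chatflow, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def changed_chatflows(baseline_hashes: dict, current: dict) -> list:
    """Return sorted IDs of Chatflows that were added, removed, or altered.

    baseline_hashes: {chatflow_id: digest} recorded from a trusted state
    current:         {chatflow_id: definition} exported now (assumed shape)
    """
    current_hashes = {fid: fingerprint(d) for fid, d in current.items()}
    all_ids = set(baseline_hashes) | set(current_hashes)
    return sorted(
        fid for fid in all_ids
        if baseline_hashes.get(fid) != current_hashes.get(fid)
    )
```

A check like this only detects modification after the fact; it is a complement to requiring authentication, not a substitute for it.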

Some of the Chatflows we were able to read and modify were used for giving financial advice, supporting weight loss, and booking rental properties, illustrating the contexts in which those technical abilities might be deployed to deceive or harm users.

Other exposed data

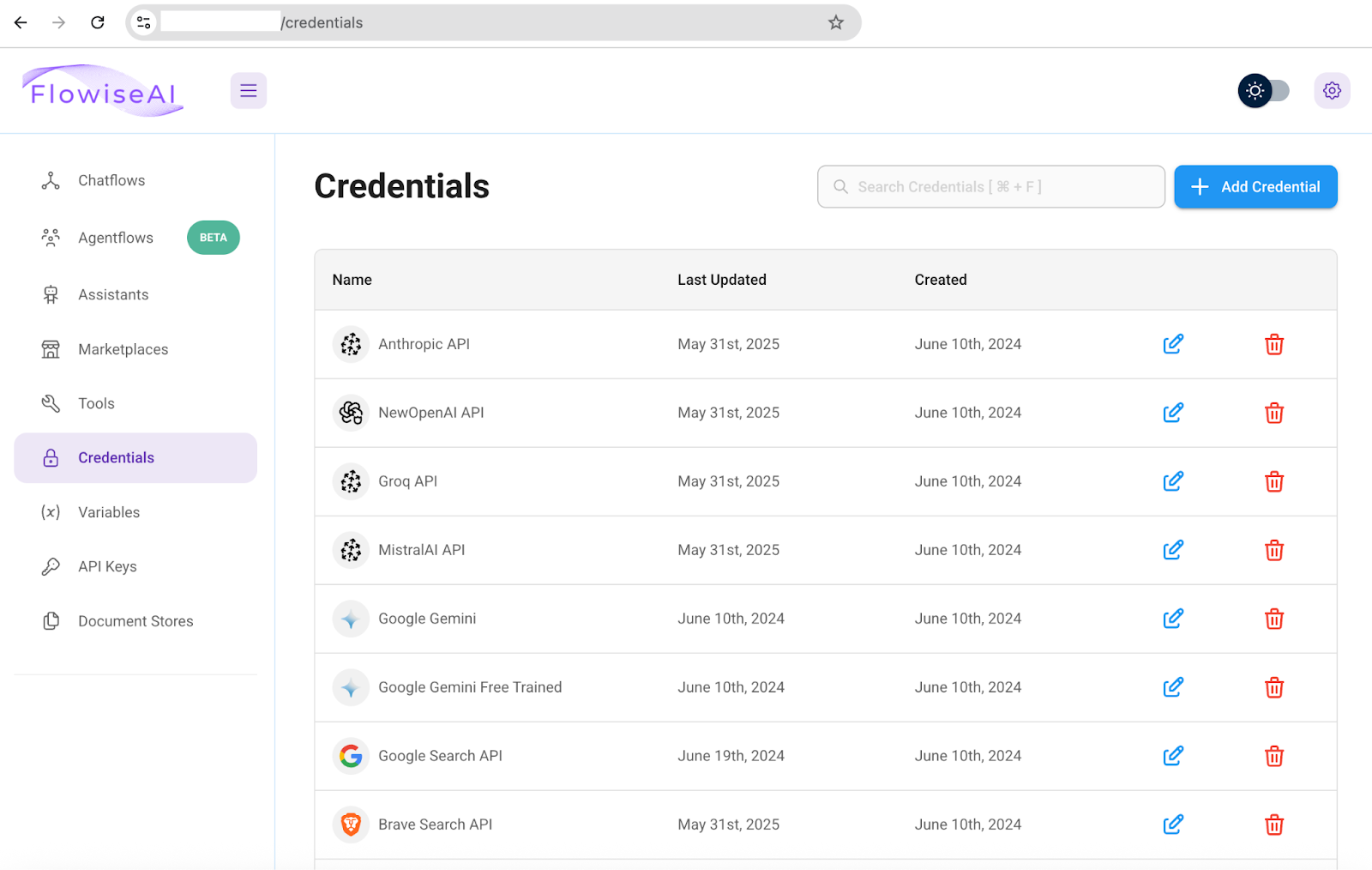

Credentials

The Flowise app has a section for users to save credentials for use in Chatflows. While these credentials are appropriately obfuscated, they are also writable. Being able to see what credentials the Chatflows use allows attackers to create their own credentials for those systems and slot them in as replacements.

That said, users of AI workflow products sometimes add credentials directly into nodes, making them readable as plaintext.
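Operators can look for this pattern in their own exported Chatflows before an outsider does. The sketch below walks any JSON-like structure and reports the paths of values that look like hard-coded secrets. It makes no assumption about the layout of a Flowise export, and both the regular expressions and the list of suspicious key names are illustrative rather than exhaustive.

```python
import re

# Illustrative patterns only; extend them for the services you use.
SECRET_PATTERNS = [
    re.compile(r"sk-[A-Za-z0-9_-]{20,}"),  # keys that use an "sk-" prefix
    re.compile(r"AKIA[0-9A-Z]{16}"),       # AWS access key ID format
]
# Hypothetical key-name fragments that suggest a credential field.
SUSPICIOUS_KEYS = ("apikey", "api_key", "password", "secret")


def find_plaintext_secrets(node, path="$"):
    """Return paths of string values that look like hard-coded secrets.

    Only paths are returned, never the values, so the report itself
    does not become another place where secrets are stored.
    """
    findings = []
    if isinstance(node, dict):
        for key, value in node.items():
            child = f"{path}.{key}"
            named_like_secret = any(s in key.lower() for s in SUSPICIOUS_KEYS)
            if isinstance(value, str) and value and named_like_secret:
                findings.append(child)
            else:
                findings.extend(find_plaintext_secrets(value, child))
    elif isinstance(node, list):
        for index, item in enumerate(node):
            findings.extend(find_plaintext_secrets(item, f"{path}[{index}]"))
    elif isinstance(node, str):
        if any(p.search(node) for p in SECRET_PATTERNS):
            findings.append(path)
    return findings
```

Any path this reports is a candidate for moving into the credential manager, followed by rotating the key that was exposed.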

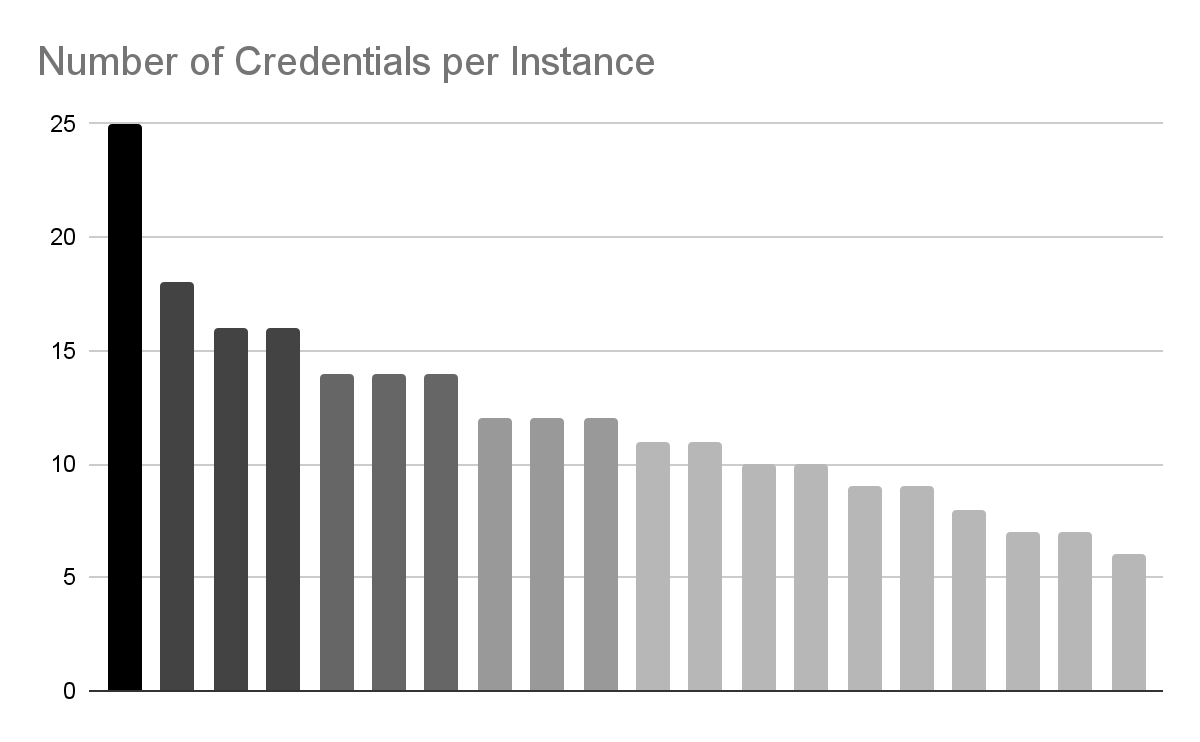

Half of the 156 instances allowing guest access had at least one credential configured, and some had many more. These credentials were most commonly for AI APIs like OpenAI and databases like Pinecone.

API Keys

The API keys section of the Flowise app provides keys for interacting with Flowise programmatically. API keys are readable for guest users. Given that we can modify Chatflows from the UI, exposing these keys does not elevate our privilege, but it does provide an easy path to automate those actions. If we really wanted to collect all the data from an instance with a hundred Chatflows, we would script it using the exposed API key.

Document store

Exposed document stores can be problematic when users have uploaded sensitive information, as we found with Chroma and Langflow. None of the exposed documents found on Flowise apps contained confidential or personal information. In theory, this section of the app could store confidential documents, but in practice we only observed documents that were already public.

Leads

Chatflows have an intriguing option to “view leads.” At face value, this functionality seems likely to leak contact information, but we did not see a single instance with data in this section.

Supply chain risk

Third party risk

In gauging the impact of these exposures, one consideration is the extent to which they impact data privacy for other organizations. This is the “if a tree falls in a forest and no one hears it” test for data leaks: there are plenty of insecurely configured software installations, but we really only care about the ones that ultimately affect the confidentiality, integrity, and availability of systems processing protected data.

To that end, we attempted to measure the number of IPs used by real companies compared to those used by individual hobbyists. Flowise appears to have good uptake among would-be suppliers in the AI supply chain. Among the IPs surveyed, 11.6% had hostnames for non-hosting providers, indicating they were set up to have a branded, recognizable web presence. On manual review, these organizations tend to be small AI consultancies, not large established companies. The risk of such misconfigurations is thus less likely to be detectable in an organization’s own attack surface, and more likely to derive from one’s third-party AI vendors.
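The hosting versus non-hosting split can be approximated by checking each hostname against the default domains that hosting providers assign. The sketch below shows the idea under stated assumptions: the suffix list is a short illustrative sample rather than the list used in our survey, and the function names are hypothetical.

```python
# Illustrative sample of default hostname suffixes assigned by hosting
# providers; a real survey would need a much longer list.
HOSTING_SUFFIXES = (
    "amazonaws.com",
    "googleusercontent.com",
    "linodeusercontent.com",
    "vultrusercontent.com",
)


def is_hosting_hostname(hostname: str) -> bool:
    """True if the hostname is, or sits under, a known hosting suffix."""
    host = hostname.lower().rstrip(".")
    return any(host == s or host.endswith("." + s) for s in HOSTING_SUFFIXES)


def branded_share(hostnames: list) -> float:
    """Fraction of surveyed IPs with a hostname that is not a hosting default.

    `hostnames` holds one entry per IP; use None or "" when an IP has no
    hostname, so that it still counts toward the denominator.
    """
    if not hostnames:
        return 0.0
    branded = [h for h in hostnames if h and not is_hosting_hostname(h)]
    return len(branded) / len(hostnames)
```

Matching on suffixes is a heuristic: it misses providers that are not on the list, which is one reason a manual review of the remaining names is still needed.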

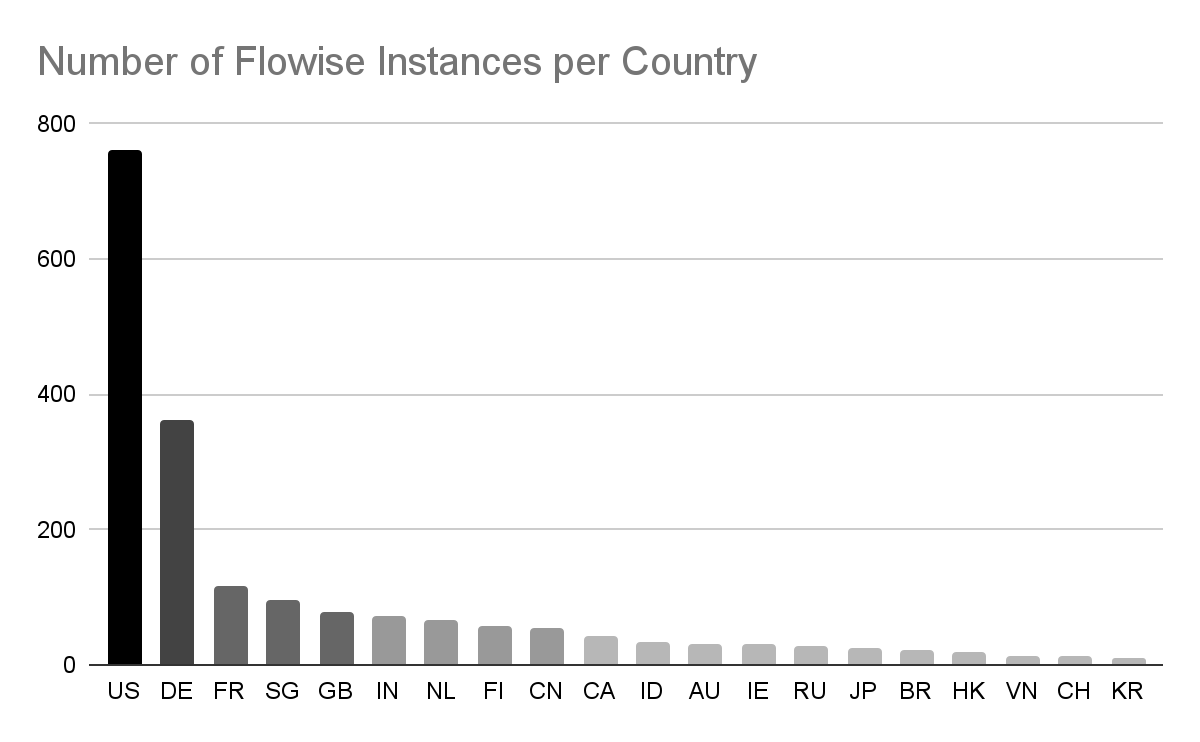

Geographic risk

The risk of exposed Flowise instances predominantly affects companies doing business in the US and Europe. While China has substantial internet-accessible AI infrastructure, not all products have equal market penetration there. Flowise is one that is under-represented in China relative to China’s overall IP space.

Conclusion

Misconfigured Flowise instances present a distinct constellation of risk factors: less likely to leak PII, more likely to expose business logic, and more likely to show up in your supply chain than hobbyist technologies. As we observed in our survey of Langflow, part of the value of a managed service is security. In comparing the security of managed versus self-hosted Flowise, the difference is clear.

Users of Flowise should enable authentication to prevent guest users from accessing their accounts. Other best practices include using the product’s credential management tool instead of hard-coding credentials into nodes, and limiting the sensitive information added to document storage. Perhaps more importantly, organizations seeking third-party help with AI adoption need to monitor their supply chain for such misconfigurations, as these issues appear more frequently in smaller, newer companies looking to provide such services.