A CISO's Guide to the Business Risks of AI Development Platforms

The tools designed to build your next product are now being used to build the perfect attack against it. Generative AI platforms can spin up a pixel-perfect replica of your brand's login page in minutes, enabling high-fidelity phishing campaigns at a scale and speed that legacy security models cannot handle.

This isn't an emerging threat; it's an industrialized phishing engine that’s already being weaponized against businesses. This guide provides a CISO's framework for moving from reactive defense to proactive disruption, detailing the attacker's new toolkit and a four-pillar plan to neutralize this threat before it impacts your business.

The dawn of a new phishing age

For years, human-led red teams set the benchmark for sophisticated phishing attacks. That era is over. According to a 2025 analysis from Hoxhunt, AI-powered phishing is now 24% more effective than campaigns designed by elite human security testers, flipping a multi-year trend.

This critical development signals that AI isn't just making cyberattacks easier or faster to create; it's making them fundamentally more successful.

At the heart of this threat are "vibe-coding" platforms — AI-powered tools designed to translate simple, natural language prompts into functional, ready-to-deploy applications. These tools empower even unskilled actors to launch sophisticated, high-fidelity phishing and malware campaigns with minimal friction.

What once took a skilled developer weeks to craft can now be generated by an amateur in minutes.

A closer look at the attacker's toolkit

The three most common tools in a modern phishing attacker's toolbox are v0.dev, Lovable, and Replit Agent.

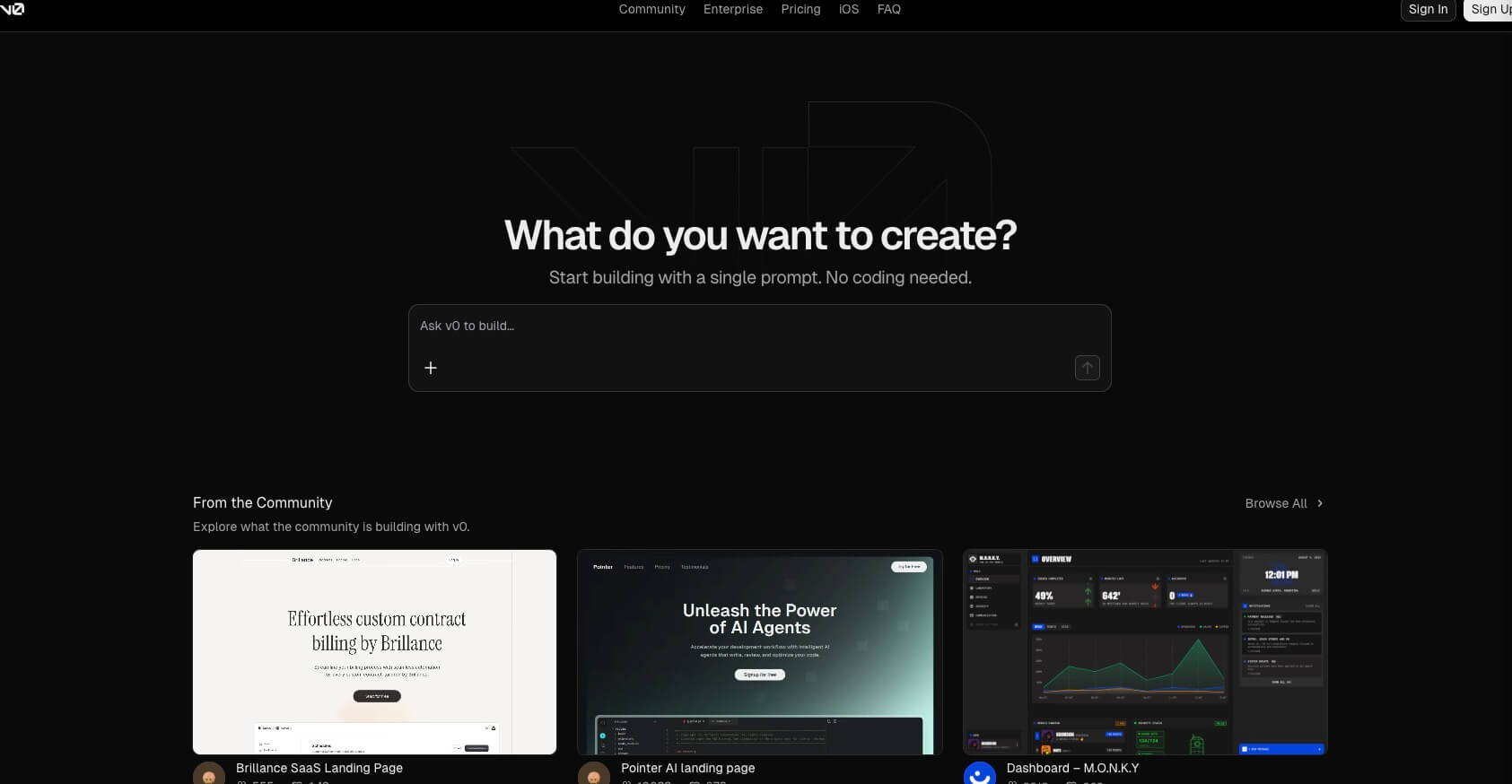

1. v0.dev

At the forefront of this trend is Vercel's v0.dev, an AI-powered tool that enables users to generate sophisticated web interfaces from simple, natural language prompts.

While intended to help web developers work more efficiently, this technology is being actively weaponized. Security teams at Okta have observed threat actors abusing the platform to build convincing, high-fidelity replicas of legitimate corporate sign-in pages.

This capability allows adversaries to "rapidly produce high-quality, deceptive phishing pages, increasing the speed and scale of their operations," according to an Okta threat intelligence report.

To make these attacks more potent, the fraudulent sites are often hosted on Vercel's trusted infrastructure, giving them a veneer of legitimacy that can fool users and conventional security filters.

To see just how quickly a replica of a website can be produced with v0.dev, watch this walkthrough video by Okta.

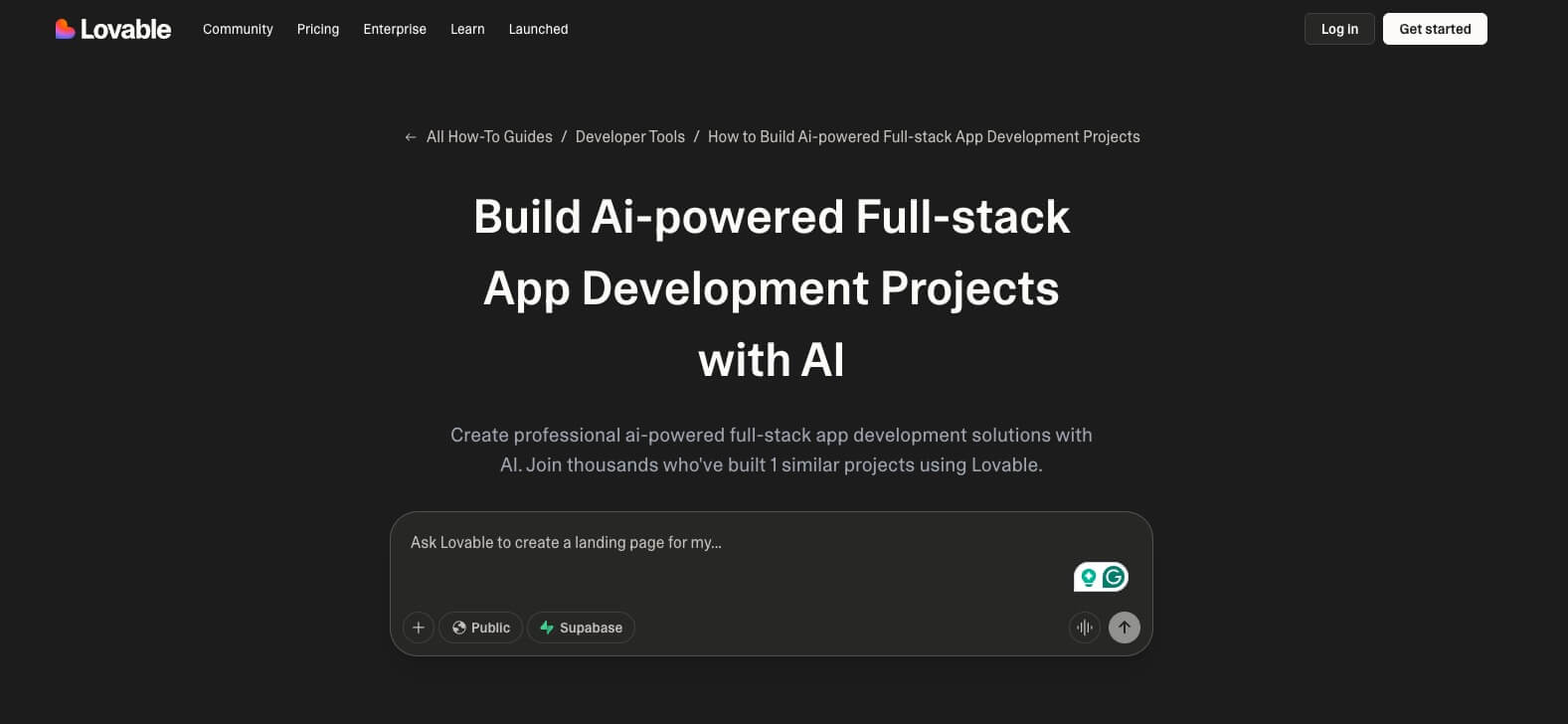

2. Lovable

Lovable is a full-stack platform that can generate an entire application — including frontend, backend, and database code — often integrating with trusted services like Supabase and GitHub.

This all-in-one capability is what makes Lovable so appealing to threat actors. Cybercriminals can now build not just an identical replica of a legitimate website but also the backend logic required to capture, store, and exfiltrate stolen data, all with just a handful of prompts.

Lovable's ease of use has led to widespread abuse among cybercriminals.

In August 2025, Proofpoint reported observing tens of thousands of Lovable URLs delivered in malicious email campaigns since February of that year, underscoring the scale of the problem.

3. Replit Agent

Replit Agent is a cloud-based integrated development environment (IDE) offering multi-language support and AI-powered coding assistance for legitimate developers.

However, its versatility makes it a perfect sandbox for cybercrime. More than just a website builder, Replit provides a complete, anonymous development and hosting environment.

Attackers exploit this to create, test, and deploy a wide range of malicious tools — from credential-stealing scripts known as "token grabbers" and "snipers" to full-fledged malware and phishing campaigns — all without relying on their own infrastructure. This turns the platform into an end-to-end operational base for malicious activity.

The impact on businesses

The operational shift to AI-driven phishing creates two primary business crises: an external crisis of customer trust and an internal crisis of security team overload.

Accelerated brand damage: The erosion of customer trust

AI development tools allow attackers to move beyond the clumsy fakes of the past and create pixel-perfect, high-fidelity replicas of corporate branding.

Threat actors can now create phishing pages that are indistinguishable from your login page.

Because customer trust can be broken in an instant, the ability of AI to rapidly scale these convincing scams accelerates reputational harm at a rate businesses have never faced before.

Overwhelmed SOC teams: Fighting a war of attrition

Internally, security teams are facing a war of attrition. Attackers are no longer limited by the time and skill needed to craft attack infrastructure; AI provides them with a near-infinite supply of malicious sites.

This creates a tsunami of alerts that can saturate even the most well-staffed Security Operations Center (SOC). The "tens of thousands" of malicious Lovable URLs detected by Proofpoint in a matter of months illustrate the sheer volume teams now face.

This turns traditional, manual takedown efforts into a futile game of "whack-a-mole," where a new malicious site can be spun up faster than an old one can be removed. With the median time for a user to fall for a phishing email now under 60 seconds, a reactive security posture is no longer viable.

By the time an SOC team can respond, the battle has already been lost.

The industrialized phishing engine: Brand impersonation at machine speed

Recent large-scale campaigns show how critical this threat has become: legitimate tools like v0.dev and Lovable can be abused to launch industrial-scale phishing attacks.

Case study 1: The v0.dev campaign and the democratization of deception

The 2025 phishing campaign that weaponized Vercel's v0.dev platform marked a new evolution in cybercrime. Threat actors demonstrated an ability to generate functional, high-fidelity phishing sites from simple text prompts, turning a revolutionary development tool into a powerful weapon.

The attack process was brutally efficient:

- Step 1: Generation: Attackers used simple, natural language prompts within v0.dev to create pixel-perfect replicas of corporate sign-in pages for brands like Microsoft 365 and Okta. What would have once required a skilled developer was accomplished in minutes.

- Step 2: Hosting: The fraudulent pages, including impersonated company logos and other assets, were hosted directly on Vercel's trusted infrastructure.

- Step 3: Evasion: This hosting strategy was key to the campaign's success. The phishing sites appeared more legitimate to victims by residing on a reputable domain. They bypassed basic security filters and domain blocklists that would typically flag a newly registered malicious domain.

This approach has a significant impact. According to Okta's threat intelligence analysis, this method effectively "democratizes advanced phishing capabilities." It lowers the barrier to entry, allowing emerging and low-skilled threat actors to rapidly produce deceptive phishing pages, dramatically increasing the speed and scale of their operations.

Case study 2: Lovable and the phishing-as-a-service explosion

While v0.dev demonstrated the ease of generating deceptive frontends, the abuse of the Lovable platform reveals a more mature criminal ecosystem. The sheer scale of the threat was highlighted in an August 2025 BleepingComputer report, which detailed how security firm Proofpoint had "observed tens of thousands of Lovable URLs" used in malicious email campaigns in just a few months.

The attack chain showed a higher level of sophistication:

- Step 1: The Lure: Campaigns began with phishing emails containing a Lovable-hosted link.

- Step 2: The Redirect: The link didn't always lead directly to the phishing site. It often served as a redirector, first presenting a CAPTCHA to appear legitimate before forwarding the victim to the final credential harvesting page. This two-step process is designed to evade automated security scanners that might only analyze the initial URL.

- Step 3: The Harvest: Many of these campaigns were tied to a large Phishing-as-a-Service (PhaaS) operation known as "Tycoon." The final landing pages used advanced Adversary-in-the-Middle (AiTM) techniques to steal user passwords, multi-factor authentication (MFA) tokens, and active session cookies.

The impact of this evolution is critical. It demonstrates that threat actors are not just using AI tools for simple page generation but integrating them into sophisticated, multi-stage attack chains. This allows them to bypass modern security controls like MFA and scale their credential harvesting operations to an industrial level, threatening thousands of organizations simultaneously.

A CISO's action plan for the AI era: From reactive defense to proactive disruption

A reactive security posture is obsolete against the new reality of machine-speed attacks. To effectively counter threats that are generated and deployed in minutes, the modern CISO must champion a proactive strategy focused on one primary goal: disrupting the attacker's kill chain at the earliest possible stage, long before a malicious campaign can reach its target.

Pillar 1: Enhance proactive threat intelligence & look-alike monitoring

CISOs must go beyond standard brand monitoring and implement a multi-layered detection strategy that finds malicious infrastructure as it is being staged.

First, implement automated domain and certificate monitoring. This involves continuously scanning Certificate Transparency (CT) logs for SSL certificates issued to domains that mimic your brand (e.g., your-brand-login.com), which is often the earliest indicator of a phishing site.

This should be paired with monitoring for newly registered domains that use your brand name, paying special attention to those hosted on subdomains of known AI platforms (*.vercel.app, *.lovable.dev, etc.).
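A minimal sketch of the look-alike check described above, assuming hostnames have already been pulled from a CT log or new-domain feed of your choice. The brand string, owned domains, and homoglyph table are hypothetical placeholders, and the matching is a deliberately simple heuristic rather than a complete detection method.

```python
import re
from typing import Optional

# Hypothetical values: replace with your own brand, domains, and watched platforms.
BRAND = "examplebrand"
OWNED_DOMAINS = ("examplebrand.com",)
PLATFORM_SUFFIXES = (".vercel.app", ".lovable.dev")

# A few common character swaps seen in look-alike names (illustrative, not exhaustive).
HOMOGLYPHS = str.maketrans({"0": "o", "1": "l", "3": "e", "5": "s"})


def normalize(hostname: str) -> str:
    """Lowercase, undo simple character swaps, and strip everything but letters."""
    host = hostname.lower().rstrip(".").translate(HOMOGLYPHS)
    return re.sub(r"[^a-z]", "", host)


def classify(hostname: str) -> Optional[str]:
    """Return a label for suspicious hostnames, or None if nothing stands out."""
    host = hostname.lower().rstrip(".")
    # Skip domains the organization actually owns.
    if any(host == d or host.endswith("." + d) for d in OWNED_DOMAINS):
        return None
    if BRAND not in normalize(host):
        return None
    # Brand name on a shared AI-platform subdomain deserves extra attention.
    if host.endswith(PLATFORM_SUFFIXES):
        return "platform-hosted"
    return "look-alike"
```

Calling `classify` on each hostname from the feed yields a label that can drive alert priority, for example routing "platform-hosted" hits straight to the takedown workflow.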

Second, operationalize infostealer log intelligence. It is not enough to simply subscribe to data feeds. An effective strategy requires building an automated workflow. When an employee or partner credential appears in a log, it must trigger a playbook that immediately forces a password reset and revokes all active sessions, neutralizing the compromised account before it can be weaponized.
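One way such a workflow could be wired together is sketched below. The `LeakedCredential` record and the two hook functions are hypothetical; in practice they would wrap whatever your infostealer feed and identity provider actually expose.

```python
from dataclasses import dataclass, field
from typing import Callable, FrozenSet, Iterable, List


@dataclass
class LeakedCredential:
    email: str
    source: str  # name of the feed or log the record came from


@dataclass
class InfostealerPlaybook:
    # Hypothetical hooks into your identity provider.
    force_password_reset: Callable[[str], None]
    revoke_sessions: Callable[[str], None]
    monitored_domains: FrozenSet[str]
    handled: List[str] = field(default_factory=list)

    def process(self, records: Iterable[LeakedCredential]) -> List[str]:
        """Reset and revoke each monitored account once; return accounts handled."""
        for record in records:
            user = record.email.lower()
            domain = user.rpartition("@")[2]
            # Ignore accounts outside our domains and ones already handled.
            if domain not in self.monitored_domains or user in self.handled:
                continue
            self.force_password_reset(user)
            self.revoke_sessions(user)
            self.handled.append(user)
        return self.handled
```

Keeping the hooks injectable means the same playbook can cover both employee and partner identity systems, and can be exercised in tests without touching production accounts.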

Pillar 2: Enforce rigorous third-party & internal AI governance

CISOs must scrutinize AI development platforms like any other critical vendor and establish clear internal guardrails for their use.

First, implement an "AI Vendor Assessment Checklist" to be completed before any new platform is approved. Security teams must get clear answers to the following questions:

- Abuse Moderation: What are your automated processes and Service Level Agreements (SLAs) for taking down reported phishing sites and malicious content?

- User Vetting: What controls are in place to prevent anonymous users from abusing the platform at scale?

- Built-in Security: Before deployment, does the platform scan AI-generated code for common vulnerabilities, such as exposed secrets or insecure API routes?
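To make the Built-in Security question concrete, the sketch below shows the kind of pattern-based check a pre-deployment scan might run for exposed secrets. The patterns are illustrative assumptions, not any vendor's actual ruleset, and a real scanner would use a much larger set plus entropy checks.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; production scanners use far broader rulesets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
    "hardcoded_credential": re.compile(
        r"(?i)\b(?:api[_-]?key|secret|token|password)\b\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def scan_source(text: str) -> List[Tuple[int, str]]:
    """Return (line number, pattern name) for every line matching a secret pattern."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings
```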

Second, establish a clear "Secure AI Usage Policy" for all development teams. This policy must include two non-negotiable rules:

- Mandatory Human Review: Prohibit the deployment of any AI-generated code to production environments without a thorough security review by a qualified engineer.

- Strict Data Handling: Developers are strictly forbidden from inputting any proprietary code, intellectual property, or sensitive company data into public AI models.

Pillar 3: Evolve incident response for machine-speed attacks

CISOs must update IR playbooks to reflect the unprecedented speed and volume of AI-driven brand impersonation campaigns.

First, develop a specific "AI Phishing Takedown" playbook. The moment a malicious site is detected, this playbook should outline parallel containment workflows.

The first step for internal containment is to immediately block the malicious domain at the corporate web filter, protecting all employees. Concurrently, the playbook should trigger automated takedown requests with the platform provider (e.g., Vercel's abuse API) and the domain registrar for external containment. Post-incident forensics should include a sweep for related Indicators of Compromise (IOCs) and a cross-reference of access logs with any credentials found in infostealer malware logs.
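The parallel containment idea can be sketched roughly as follows. Each action is a hypothetical callable that your team would implement against its own web filter, the hosting platform's abuse channel, and the registrar; none of them represents a real API here.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def run_containment(url: str, actions: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Run every containment action concurrently and collect per-action outcomes."""
    results: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max(1, len(actions))) as pool:
        # Submit everything first so no action waits on another.
        futures = {name: pool.submit(action, url) for name, action in actions.items()}
        for name, future in futures.items():
            try:
                results[name] = future.result()
            except Exception as exc:
                # One failed takedown request must not hide the others.
                results[name] = f"failed: {exc}"
    return results
```

Recording a result per action, including failures, gives the post-incident review a clear record of which containment steps landed and which need manual follow-up.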

Second, automate user-level responses. Integrate security tools to enable immediate, automated actions when a user compromise is detected. For instance, if an Endpoint Detection and Response (EDR) solution detects infostealer activity or a user reports a successful phish, it should automatically trigger the revocation of all active sessions and disable the account pending a full investigation.

Pillar 4: Fortify the last line of defense with phishing-resistant MFA

CISOs must operate under the assumption that some phishing attempts will inevitably succeed and focus on making stolen credentials worthless.

The first step is to champion the adoption of phishing-resistant multi-factor authentication (MFA). This requires educating the business on the superior value of authenticators that use the FIDO2/WebAuthn standard, such as passkeys or hardware security keys like YubiKeys.

It is critical to articulate why these methods are not just "stronger" MFA but a different class of security altogether. They rely on cryptographic binding, which securely ties a user's login credentials to the legitimate domain on which they were registered.

This means that even if a user is completely fooled by a pixel-perfect, AI-generated phishing site and attempts to authenticate, the passkey or security key will recognize the fraudulent domain and refuse to operate. The attack is stopped at the last possible moment, rendering the stolen password completely useless.
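The domain binding can be illustrated with two of the checks a WebAuthn relying party performs on a login assertion: the origin recorded by the browser in `clientDataJSON`, and the SHA-256 hash of the relying party ID carried in the first 32 bytes of the authenticator data. This is a partial sketch with hypothetical origin and RP ID values; it omits challenge and signature verification, which a real deployment should delegate to a maintained WebAuthn library.

```python
import hashlib
import hmac
import json

# Hypothetical values for the legitimate site.
EXPECTED_ORIGIN = "https://login.example.com"
RP_ID = "example.com"


def assertion_is_bound_to_site(client_data_json: bytes, authenticator_data: bytes) -> bool:
    """Check the origin and RP ID hash of a WebAuthn assertion (partial verification)."""
    client_data = json.loads(client_data_json)
    if client_data.get("type") != "webauthn.get":
        return False
    # The browser writes the page's real origin here; a phishing page cannot forge it.
    if client_data.get("origin") != EXPECTED_ORIGIN:
        return False
    # The authenticator data begins with SHA-256(RP ID) for the scoped credential.
    expected_hash = hashlib.sha256(RP_ID.encode("utf-8")).digest()
    return hmac.compare_digest(authenticator_data[:32], expected_hash)
```

In practice the browser and authenticator usually refuse to use the credential on a mismatched domain before the server ever sees a response; the server-side checks are a second layer of the same binding.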

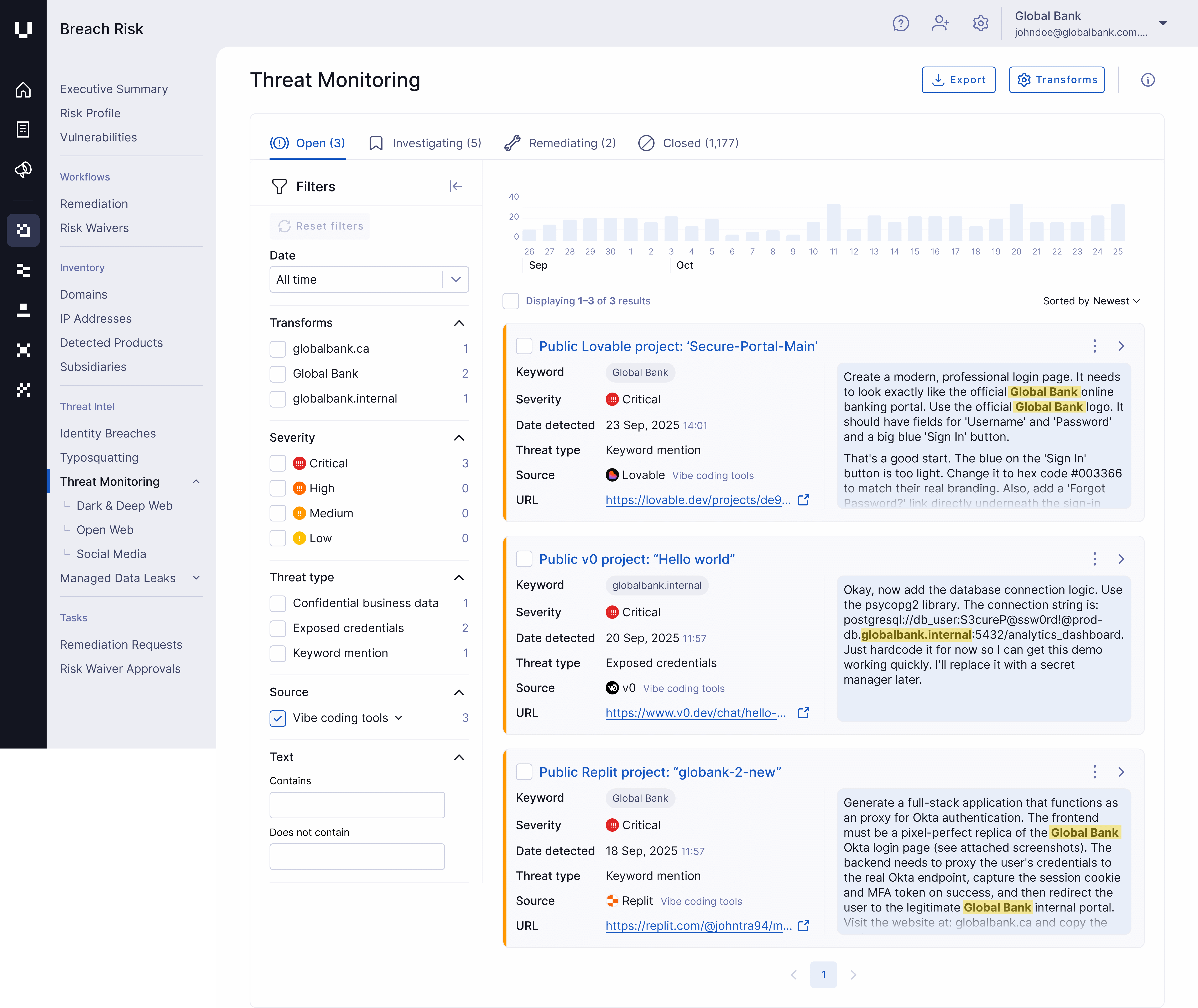

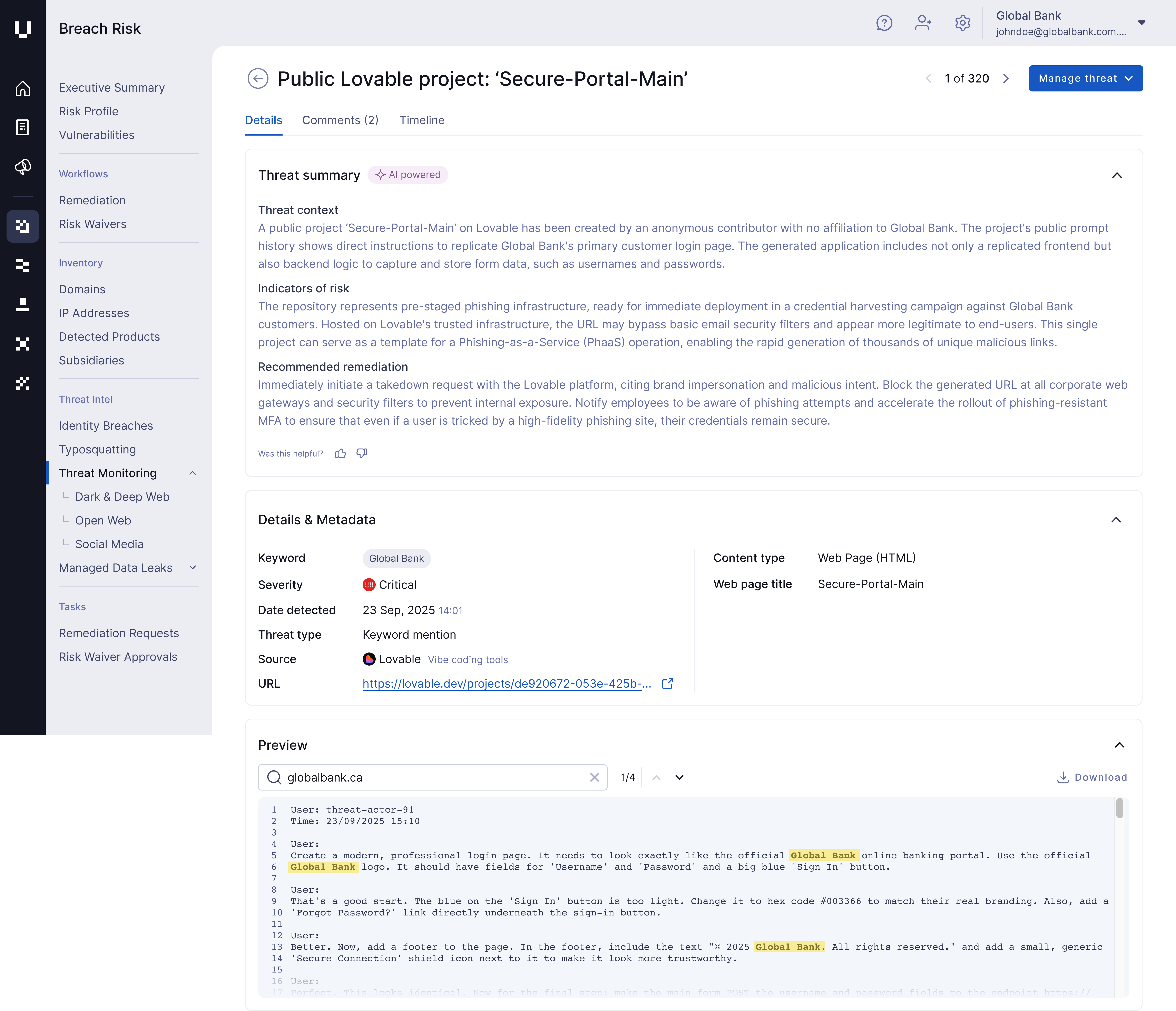

How UpGuard enables proactive threat monitoring

Implementing a defense strategy for this modern threat category requires a shift from periodic checks to continuous, automated visibility. The speed of "vibe-coding" means a malicious site can be generated, used in an attack, and taken down before traditional brand monitoring services even detect it. This is where proactive threat monitoring becomes essential.

UpGuard’s platform is designed for this new reality. It moves beyond reactive security by continuously monitoring the external attack surface for signs of AI-driven impersonation. Instead of waiting for an employee to report a phishing email, your SOC receives an alert the moment a threat actor stages their attack infrastructure.

UpGuard proactively detects newly registered domains, look-alike websites hosted on platforms like Vercel and Lovable, and exposed credentials or services that are the targets of these phishing campaigns.

This early detection provides the critical window needed to initiate takedowns, block malicious domains, and reset compromised credentials, neutralizing the threat before it impacts your customers and your brand.