Cyber threat monitoring is critical for effective cybersecurity, yet most organizations operate with gaping blind spots. In this post, we shed some light on the limitations of conventional approaches to help you achieve comprehensive visibility of your attack surface.

Why organizations need real-time visibility

Historically, threat detection was primarily reactive, relying on signature-based tools and rule-based systems to find known patterns of malicious activity in network logs and files. This approach was effective when data sets were smaller and threats were well-documented, but it struggled to keep pace as cyber threat data complexity and volume exploded.

The introduction of machine learning (ML) and deep learning to cybersecurity programs injected new energy into detection strategies, enabling security teams to identify cyber threat patterns accurately and with the speed necessary to keep up with modern cyber attack methods.

Today, cyber threat monitoring is being shaped by artificial intelligence (AI), particularly Large Language Models (LLMs). These advancements are changing detection capabilities in two key ways:

- Adding Context to Detection: While earlier ML models could flag a file as malicious, they couldn't explain why. LLMs, trained on diverse information including unstructured threat intelligence reports, can now provide crucial context behind a decision, delivering a more informed response rather than just a binary alert. As a result, cyber threat remediation actions are now more targeted and being deployed faster than ever.

- Comprehending Complex Data: LLMs have demonstrated a surprising ability to understand and identify malicious activity within data formats that are not traditional prose. This includes log files, code, JSON, and even malware hashes, significantly expanding the scope of data that can be automatically analyzed for cyber risks.

This shift from looking for known signatures to analyzing behaviors and understanding context allows security teams to identify and even anticipate unknown threats before they can cause significant damage.

The high cost of dwell time

In cybersecurity, time is the attacker's most valuable resource. The longer attackers remain undetected within a network, the more damage they can inflict. The security industry has made significant progress in reducing attacker dwell time, which fell from 16 days in 2022 to just 10 days in 2023. While this may seem like an impressive improvement, 10 days is still an eternity for cybercriminals, especially those who have bolstered their attack techniques with AI technology.

The critical stages of a cyber attack, such as data exfiltration via an SQL injection or a "smash and grab" ransomware deployment, can now occur in just minutes or hours, making even a multi-day dwell time far too long to prevent significant financial damage.

The challenges of a modern dynamic attack surface

The widespread adoption of cloud-based architecture, the permanence of remote work, and the rise of dynamic virtualized assets have dissolved traditional network boundaries, creating a perimeter-less reality that introduces new and complex risks.

The attack surface now extends far beyond the corporate office, encompassing cloud misconfigurations, insecure home networks, and highly transient virtual assets that are difficult to track.

This expanded, dynamic attack surface creates significant monitoring challenges that legacy security tools were not designed to handle:

- Visibility Gaps in IaaS/PaaS: Effective cloud environment monitoring requires enabling and centrally collecting multiple log sources — such as network traffic logs, storage access logs, and audit logs — but the quality and availability of this data can depend heavily on the organization's specific cloud subscription level.

- Securing Unmanaged Devices: With remote and hybrid workforces, the risk shifts to individual users and their endpoints. Corporate credentials can be compromised on personal or contractor devices used for work, especially if those devices are also used in Shadow IT or Shadow SaaS practices. Securing these unmanaged "Bring Your Own Device" (BYOD) assets is a major challenge, as organizations cannot enforce security controls on systems they do not own.

- Tracking Transient Virtual Assets: Modern cloud-native environments increasingly use ephemeral workloads, which are transient by nature and may exist for only a few minutes. One example is an ephemeral container used to troubleshoot minimal, secure "distroless" applications in Kubernetes. Because these assets are so short-lived, traditional security scanning or agent-based monitoring may miss them entirely, creating blind spots where an attacker could execute commands or exfiltrate data without leaving a persistent footprint.

The rising adoption of generative AI solutions among third-party vendors creates particular monitoring challenges, especially in Shadow IT.

Compliance and reputational risks

Beyond the immediate technical challenges, inadequate threat monitoring exposes organizations to severe business risks, starting with regulatory non-compliance.

A growing number of global and industry-specific frameworks, such as GDPR, HIPAA, PCI DSS, DORA, and CIRCIA, mandate or strongly imply the need for continuous monitoring to protect sensitive data. However, with attackers now leveraging AI, legacy monitoring approaches struggle to detect emerging cyber threats, making compliance with these regulations increasingly difficult.

Failing to modernize monitoring capabilities can lead to significant fines and erode brand trust. Lost business from customer turnover and reputational damage is a primary driver of data breach costs.

Critical methods to identify cyber threats

Understanding the need for real-time visibility is the first step to improving cyber threat monitoring. The next step is implementing the proper methods to achieve it. Moving from theory to practice requires adopting proactive, advanced techniques that align with the realities of the modern cyber threat landscape.

You need to shift your focus from merely defending the IT perimeter to actively hunting for threats that may already be operating inside.

This new and improved approach to cyber threat monitoring can be implemented with the following strategies:

1. Adopt an "assume breach" mindset

It's time to finally abandon the outdated "castle-and-moat" security model in favor of Zero Trust principles based on an "assume breach" mindset.

According to the traditional "castle-and-moat" approach, anyone inside the network is trusted by default. The critical flaw in this strategy is that once attackers cross the "moat", whether through stolen credentials, malware, or a social engineering attack, they are treated as trusted users, which grants them free access to internal applications and sensitive data.

In contrast, a modern Zero Trust security framework operates on the core principle of "Never Trust, Always Verify." This approach starts with the assumption that a breach has already occurred and that security risks are present both inside and outside the network.

No user, device, or connection is trusted by default, regardless of location. Instead, Zero Trust requires users to continuously verify their identity with every attempt to access sensitive resources.

An assume breach mindset applies from the moment a user is onboarded: each user receives only the minimum system access required to perform their duties, a practice known as the Principle of Least Privilege.
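To make "never trust, always verify" and least privilege concrete, here is a minimal Python sketch of a per-request access check. It is an illustration under stated assumptions, not a production policy engine: the roles, the permission map, and the `mfa_verified` and `device_compliant` request fields are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical least-privilege map: each role lists only the
# (resource, action) pairs it needs to do its job.
ROLE_PERMISSIONS = {
    "support": {("tickets", "read"), ("tickets", "write")},
    "finance": {("invoices", "read")},
}


@dataclass
class AccessRequest:
    user: str
    role: str
    resource: str
    action: str
    mfa_verified: bool      # assumed signal from the identity provider
    device_compliant: bool  # assumed signal from device management


def authorize(request: AccessRequest) -> bool:
    """Evaluate every request on its own; network location grants nothing."""
    # Never trust, always verify: identity and device posture are checked
    # on each request, not once at login.
    if not (request.mfa_verified and request.device_compliant):
        return False
    # Deny by default: unknown roles and unlisted permissions are refused.
    allowed = ROLE_PERMISSIONS.get(request.role, set())
    return (request.resource, request.action) in allowed


print(authorize(AccessRequest("dana", "finance", "invoices", "read", True, True)))   # True
print(authorize(AccessRequest("dana", "finance", "invoices", "write", True, True)))  # False
print(authorize(AccessRequest("dana", "finance", "invoices", "read", True, False)))  # False
```

The deny-by-default lookup is the key design choice: a permission that nobody explicitly granted simply does not exist.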

An assume breach mindset will also naturally pivot your cybersecurity strategy toward a greater focus on mitigating insider threats, laying the groundwork for a human cyber risk management program.

2. Monitor the dark web

An "assume breach" mindset requires proactive intelligence-gathering outside your network. A critical source for this is the dark web, which hosts thousands of illicit marketplaces and forums where sensitive corporate information is often traded or leaked following a breach.

Modern cyber threat monitoring involves the continuous, automated scanning of these sources — including ransomware blogs, forums, credential dumps, and encrypted messaging platforms like Telegram — to find intelligence relevant to your organization's digital footprint, such as:

- Leaked corporate or employee credentials.

- Exposed sensitive customer data, like the private medical records leaked in the Medibank cyber attack.

- Mentions of your brand or executives.

- The sale of proprietary company data or intellectual property.

The primary value of dark web monitoring is its function as an early warning system of an impending breach. Many organizations struggle with this aspect of cyber threat monitoring. As a result, their compromised sensitive data circulates across dark web marketplaces for weeks or even months without their knowledge.

The negative implications of dwell time extend to leaks on the dark web. Credentials posted in a data dump can be harvested and used in credential stuffing attacks within hours. By detecting this exposure in near real-time, security teams can take preemptive action — such as resetting compromised passwords or notifying affected users — before the information can be weaponized in an active attack, turning a potential crisis into a manageable security task.
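As a simplified illustration of that preemptive step, the Python sketch below scans a leaked credential dump for accounts on your corporate domains so they can be queued for password resets. It assumes a plain `email:password` line format and exact domain matching; real dumps vary widely in format, and the function name and sample data are hypothetical.

```python
from collections.abc import Iterable


def find_exposed_accounts(dump_lines: Iterable[str], domains: set[str]) -> list[str]:
    """Return corporate email addresses that appear in a leaked credential dump.

    Assumes one 'email:password' entry per line and exact (lowercase) domain
    matching. The leaked password itself is discarded rather than stored.
    """
    exposed = set()
    for line in dump_lines:
        email, sep, _password = line.strip().partition(":")
        if not sep or "@" not in email:
            continue  # skip malformed or unrelated lines
        domain = email.rsplit("@", 1)[1].lower()
        if domain in domains:
            exposed.add(email.lower())
    return sorted(exposed)


dump = [
    "alice@example.com:hunter2",
    "bob@other.org:letmein",
    "not a credential line",
    "Carol@Example.com:s3cret",
]
print(find_exposed_accounts(dump, {"example.com"}))
# ['alice@example.com', 'carol@example.com']
```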

3. Address human cyber risks

While external threats are a major concern, the human element remains a primary factor in security incidents. Some studies show human error is a cause in up to 95% of breaches. Modern threat monitoring looks inward to address this, using User and Entity Behavior Analytics (UEBA) to identify internal threats.

UEBA is a type of security software that uses machine learning and behavioral analytics to understand what is "normal" within an IT environment. UEBA solutions create a baseline picture of how users and entities (such as servers, routers, and applications) typically function by ingesting and analyzing data from multiple sources. The system then continuously monitors and flags dangerous deviations from this baseline in real-time, such as sudden mass data downloads, unusual login times, or attempts to escalate privileges.
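Commercial UEBA products use far richer models, but the core idea of baselining and flagging deviations can be sketched with a simple z-score test in Python. The metric (daily megabytes downloaded by one user) and the threshold of three standard deviations are illustrative assumptions.

```python
import statistics


def is_anomalous(history: list[float], today: float, threshold: float = 3.0) -> bool:
    """Flag `today` if it deviates from the user's baseline by more than
    `threshold` sample standard deviations (a simple z-score test)."""
    if len(history) < 2:
        return False  # too little data to establish a baseline
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return today != mean  # perfectly flat baseline: any change is a deviation
    return abs(today - mean) / stdev > threshold


# Hypothetical baseline: megabytes downloaded per day by one user.
baseline = [120, 95, 130, 110, 105, 125, 100]
print(is_anomalous(baseline, 140))   # False: within normal variation
print(is_anomalous(baseline, 2400))  # True: sudden mass download
```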

This focus on behavior makes UEBA uniquely effective at identifying two critical types of insider threats that often bypass traditional security tools:

- Malicious Insiders: These are authorized employees or contractors attempting to steal data or cause harm. Because their access is legitimate, traditional tools may not flag their activity. UEBA, however, can identify a user violating security policies or accessing data inconsistent with their role, even if they have the credentials to do so.

- Compromised Accounts: These occur when an external attacker steals a legitimate user's credentials via phishing or other means. The attacker's activity appears authorized to the network, allowing them to move laterally and escalate their privileges. UEBA can detect this by spotting anomalous behaviors that deviate from the legitimate user's established baseline, such as logging in from an unusual IP address, accessing new systems, or downloading abnormal amounts of data.

UEBA provides deep visibility into how user identities interact with applications and data, helping security teams detect and respond to the human vulnerabilities, malicious or accidental, at the root of most security breaches.

Note: Deploying a tool like UEBA isn't a standalone solution to addressing human cyber risks. It should be considered one component of a broader human cyber risk management strategy.

4. Leverage network traffic analysis (NTA)

Analyzing data flows and packet metadata with Network Traffic Analysis (NTA) can reveal hidden anomalies that traditional firewalls might miss. By monitoring east-west (internal) traffic, not just north-south (inbound/outbound), security teams can identify malicious patterns that indicate an active compromise.

Key patterns NTA can detect include:

- Ransomware: A sudden surge in east-west traffic showing rapid file access and encryption across multiple servers strongly indicates a ransomware attack in progress.

- DDoS Attacks: NTA can spot the anomalous spikes in UDP, ICMP, or SYN packets targeting specific services that characterize a Distributed Denial-of-Service attack.

- C2 Communication: NTA is crucial for detecting the subtle "beacons" or "heartbeats" that malware sends to external command-and-control (C2) servers, a common tactic for maintaining persistence and receiving instructions.
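Beacon detection shows how NTA turns raw flow metadata into a signal. The Python heuristic below, a hedged sketch rather than any product's algorithm, flags connections from one host to a single external destination that occur at near-constant intervals, measured by the coefficient of variation of the gaps between connections. The thresholds (`min_events`, `max_cv`) are illustrative assumptions, and real malware often adds random jitter precisely to evade this kind of check.

```python
import statistics


def looks_like_beacon(timestamps: list[float], min_events: int = 6, max_cv: float = 0.1) -> bool:
    """Heuristic C2 beacon check for one (source host, destination) pair.

    `timestamps` are connection start times in seconds. Highly regular
    gaps between connections suggest automated check-ins.
    """
    if len(timestamps) < min_events:
        return False  # too few connections to judge regularity
    ts = sorted(timestamps)
    intervals = [later - earlier for earlier, later in zip(ts, ts[1:])]
    mean_gap = statistics.mean(intervals)
    if mean_gap <= 0:
        return False
    # Coefficient of variation: standard deviation relative to the mean gap.
    # Values near 0 mean the connections are almost perfectly periodic.
    cv = statistics.pstdev(intervals) / mean_gap
    return cv <= max_cv


# Roughly every 60 seconds, with small timing noise: beacon-like.
print(looks_like_beacon([0, 60, 120.5, 180, 240.2, 300, 359.8]))  # True
# Irregular, human-driven browsing to the same site.
print(looks_like_beacon([0, 5, 7, 300, 302, 900, 2000]))  # False
```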

5. Automate endpoint detection and response (EDR/XDR)

Modern Endpoint Detection and Response (EDR) platforms go far beyond legacy antivirus by focusing on malicious behavior rather than just known file signatures. This behavioral approach allows them to detect advanced threats like fileless malware and malicious PowerShell scripts that traditional tools often miss. EDR solutions continuously record activities and events on endpoints like laptops and servers, providing security teams with the visibility needed to uncover stealthy attacks.

A key advantage of modern EDR and Extended Detection and Response (XDR) platforms is their use of automation to accelerate response. When a threat is detected, the EDR tool can automatically contain a compromised endpoint by isolating it from the network. This immediate action stops an attack from spreading and significantly reduces the manual triage workload for the Security Operations Center (SOC) team, enabling faster and more precise remediation.

6. Incorporate AI-driven threat intelligence

The sheer volume of security logs a modern enterprise generates makes manual analysis impossible. Artificial intelligence is now an essential aid for parsing these enormous data repositories to identify threat patterns accurately and at scale.

Large Language Models (LLMs) can comprehend and analyze a wide variety of formats beyond simple text, including log files, code, scripts, and JSON data. Because they can work with broader data context at much faster processing speeds, AI technologies are key to reducing dwell time and its associated damage costs.

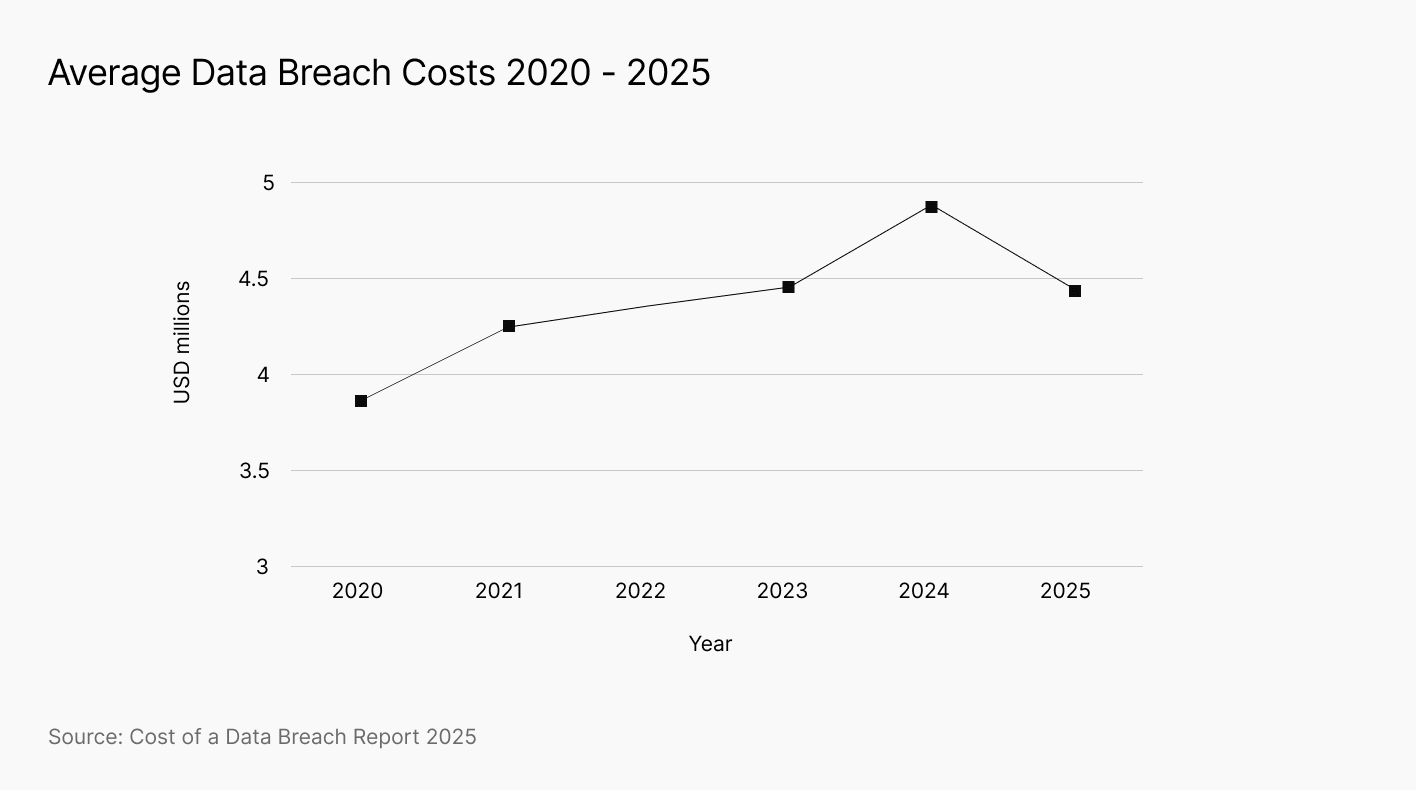

The findings of IBM's 2025 Cost of a Data Breach Report confirm AI's consequential influence in cybersecurity: the global average cost of a data breach declined for the first time in five years, driven by faster containment enabled by AI-powered defenses.

Essential tools for continuous monitoring

A modern cyber threat monitoring strategy relies on an integrated security stack where key technologies work together to provide comprehensive visibility and automate response. Understanding how these core platforms function is the first step toward building a resilient and efficient monitoring operation.

SIEM and SOAR platforms

At the core of many modern Security Operations Centers (SOCs) are two complementary platforms:

- Security Information and Event Management (SIEM)

- Security Orchestration, Automation, and Response (SOAR)

Together, they form the foundation for centralized detection and automated action.

Security Information and Event Management (SIEM) serves as the central nervous system for security operations. It aggregates log data from across the entire IT environment — including networks, cloud infrastructure, and endpoints — to detect anomalies and generate alerts.

A SOAR platform acts as the muscle, taking the alerts generated by the SIEM and automatically executing response actions through pre-defined "playbooks". This can include actions like isolating a compromised endpoint, blocking a malicious IP address, or creating a ticket for an analyst to investigate further.
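The playbook idea can be sketched in a few lines of Python: a mapping from alert type to an ordered list of response steps. This is a hypothetical illustration, not any SOAR product's API. The action functions are stubs that only describe what a real integration (EDR, firewall, ticketing) would do, and the alert types are assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Alert:
    alert_type: str
    host: str
    source_ip: Optional[str] = None


# Placeholder actions. A real SOAR platform would call EDR, firewall, and
# ticketing integrations here; these stubs only describe what they would do.
def isolate_endpoint(alert: Alert) -> str:
    return f"isolate {alert.host}"


def block_ip(alert: Alert) -> str:
    return f"block {alert.source_ip}"


def open_ticket(alert: Alert) -> str:
    return f"ticket: {alert.alert_type} on {alert.host}"


# Hypothetical playbooks: an ordered list of response steps per alert type.
PLAYBOOKS: dict[str, list[Callable[[Alert], str]]] = {
    "ransomware": [isolate_endpoint, open_ticket],
    "c2_beacon": [block_ip, isolate_endpoint, open_ticket],
}


def run_playbook(alert: Alert) -> list[str]:
    # Alert types without a playbook fall back to analyst review.
    steps = PLAYBOOKS.get(alert.alert_type, [open_ticket])
    return [step(alert) for step in steps]


print(run_playbook(Alert("c2_beacon", "laptop-17", "203.0.113.50")))
# ['block 203.0.113.50', 'isolate laptop-17', 'ticket: c2_beacon on laptop-17']
```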

The primary function of a SIEM-SOAR combination is to accelerate the incident response lifecycle, directly shrinking the Mean Time to Detect (MTTD) and Mean Time to Respond (MTTR), which in turn minimizes the financial and operational impact of a cyber attack.
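Because definitions vary between organizations, the Python sketch below assumes MTTD is measured from when an incident started to when it was detected, and MTTR from detection to resolution (some teams measure to containment instead). The incident record fields and dates are hypothetical.

```python
from datetime import datetime
from statistics import mean


def mttd_mttr(incidents: list[dict[str, datetime]]) -> tuple[float, float]:
    """Return (MTTD, MTTR) in hours.

    Assumes each incident has 'started', 'detected', and 'resolved'
    timestamps; MTTR here runs from detection to resolution.
    """
    detect_hours = [(i["detected"] - i["started"]).total_seconds() / 3600 for i in incidents]
    respond_hours = [(i["resolved"] - i["detected"]).total_seconds() / 3600 for i in incidents]
    return mean(detect_hours), mean(respond_hours)


incidents = [
    {"started": datetime(2024, 5, 1, 0, 0), "detected": datetime(2024, 5, 1, 6, 0),
     "resolved": datetime(2024, 5, 1, 10, 0)},
    {"started": datetime(2024, 5, 8, 0, 0), "detected": datetime(2024, 5, 8, 2, 0),
     "resolved": datetime(2024, 5, 8, 4, 0)},
]
print(mttd_mttr(incidents))  # (4.0, 3.0)
```

Tracking both numbers over time shows whether SIEM detections and SOAR automation are actually shortening each stage.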

Attack surface management

Effective cyber threat monitoring requires a complete and accurate understanding of your organization's digital footprint from an attacker's perspective. Attack Surface Management (ASM) platforms provide this crucial external view by continuously discovering, analyzing, and monitoring all of an organization's internet-facing assets. These platforms are designed to find exposures before attackers can exploit them, automatically scanning for risks like:

- Unknown and untracked assets, including shadow IT and forgotten subdomains.

- Exposed services and open ports.

- Known software vulnerabilities and misconfigurations.

- Unsecured AI or LLM endpoints.
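Once an ASM platform has discovered internet-facing services, a first-pass check can compare them against ports that are commonly risky to expose. The Python sketch below assumes discovery output as `(host, port)` pairs; the port list is an illustrative subset of well-known defaults (including 11434, the default port of the Ollama local LLM server), not an authoritative policy, and the hostnames are placeholders.

```python
# Illustrative subset of well-known default ports that are commonly risky
# to expose to the internet (an assumption, not an authoritative policy).
RISKY_PORTS = {
    23: "Telnet",
    445: "SMB",
    3306: "MySQL",
    3389: "RDP",
    6379: "Redis",
    9200: "Elasticsearch",
    11434: "Ollama LLM API",
    27017: "MongoDB",
}


def flag_exposures(discovered: list[tuple[str, int]]) -> list[str]:
    """Return a finding for each discovered (host, port) on a risky port."""
    findings = []
    for host, port in discovered:
        service = RISKY_PORTS.get(port)
        if service is not None:
            findings.append(f"{host}:{port} exposes {service}")
    return findings


scan = [("www.example.com", 443), ("dev.example.com", 6379), ("gpu.example.com", 11434)]
for finding in flag_exposures(scan):
    print(finding)
# dev.example.com:6379 exposes Redis
# gpu.example.com:11434 exposes Ollama LLM API
```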

Modern security strategies recognize that an organization's risk is not confined to its own infrastructure; it extends to its entire supply chain. Because of this, siloed security tools can create dangerous blind spots. A comprehensive monitoring program requires a unified platform that combines external ASM for the organization's own assets with Third-Party Risk Management for the assets of vendors across its supply chain.

Platforms like UpGuard provide this continuous, external visibility, allowing security teams to discover and prioritize risks across their full digital footprint as well as the attack surfaces of their vendors, all from a single location.

Identity and access management solutions

A robust Identity and Access Management (IAM) framework is a cornerstone of modern threat monitoring. It is designed to ensure that only the right people and devices can access the right resources.

A critical component of IAM is enforcing the principle of least privilege, which dictates that users are granted only the minimum access rights necessary to perform their assigned functions. This practice is crucial for limiting the "blast radius" of a security breach. When an attacker compromises an account, a least-privilege model severely restricts their ability to move laterally, escalate privileges, and access sensitive data, effectively containing the threat from the outset.

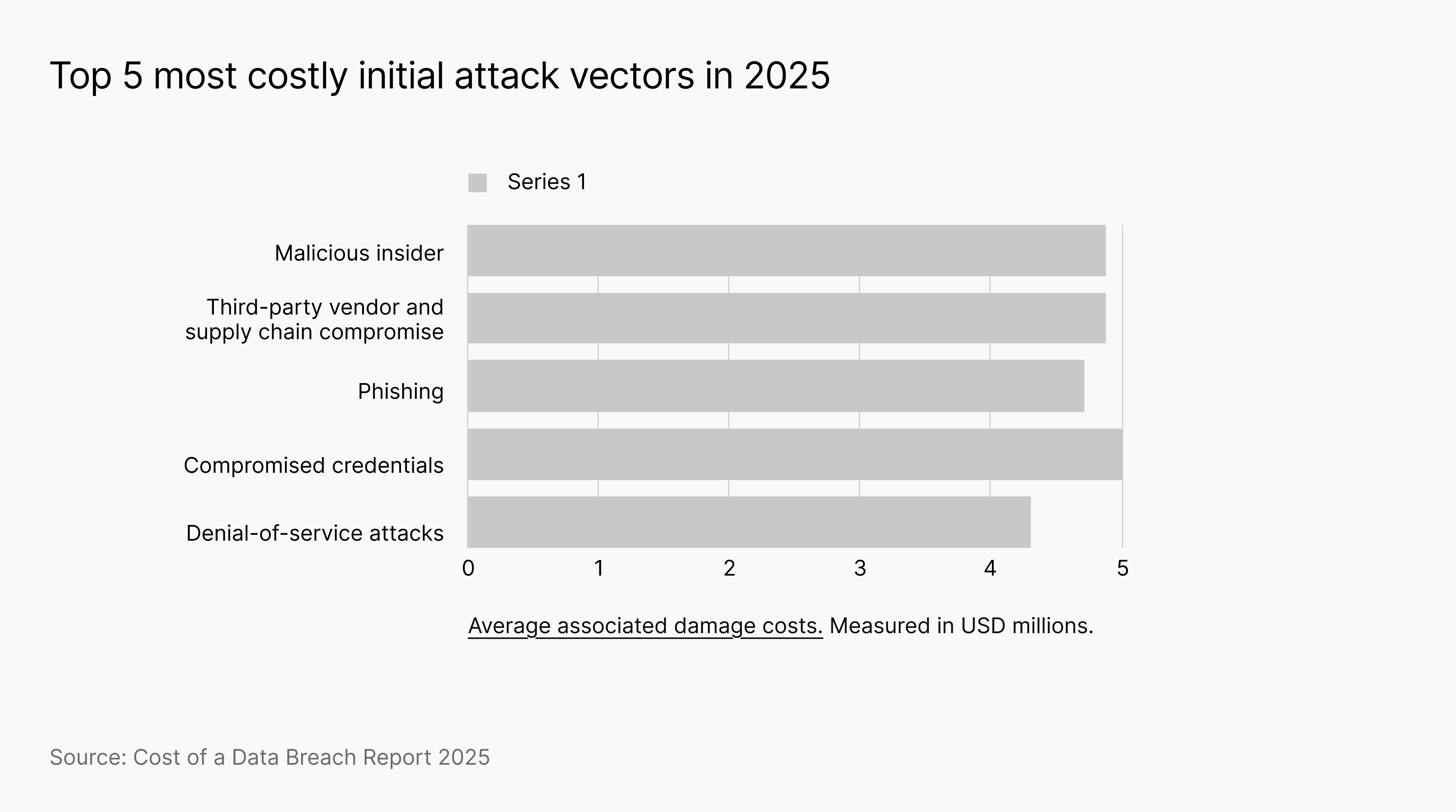

Beyond access controls, modern IAM solutions are integral to a proactive monitoring strategy. Since stolen credentials remain a top initial infection vector for attackers, implementing proactive security measures is essential.

This includes:

- Enforcing Phishing-Resistant Multi-Factor Authentication (MFA): Security experts strongly recommend moving beyond simple MFA methods to phishing-resistant options like FIDO2 security keys or certificate-based authentication. These methods are designed to thwart social engineering and credential theft attacks that can bypass weaker MFA implementations.

- Continuous Credential Monitoring: Modern security platforms continuously monitor the dark web for leaked corporate credentials. This serves as a vital early warning system, allowing security teams to detect when an employee's password has been exposed in a third-party breach and force a reset before it can be used maliciously. Compromised credential monitoring has become a staple feature of cyber threat detection tools.

AI-powered intelligence and triage

Perhaps the most significant evolution in threat monitoring is using artificial intelligence to automate the labor-intensive process of threat triage. Modern AI, especially Large Language Models (LLMs), can now function as a virtual Tier-1 analyst, sifting through immense volumes of data to surface only the most critical, relevant threats with the context needed for rapid response.

An AI-driven triage system automates the end-to-end process of identifying and preparing threats for remediation.

These platforms are designed to:

- Continuously Scan and Collect Data: They automatically monitor various sources, including dark web forums, ransomware blogs, paste sites, credential dumps, and malware logs.

- Analyze and Prioritize Threats: Using machine learning, the system analyzes findings and classifies them based on confidence level, relevance to the organization, and potential impact.

- Suppress Noise and Reduce Alert Fatigue: A major challenge with threat monitoring is the sheer volume of irrelevant chatter. AI-driven systems intelligently filter out duplicates, stale data, and low-confidence findings to present a curated stream of actionable intelligence, allowing security teams to focus on what matters.

- Integrate with Workflows: Findings are presented with context and integrated into remediation workflows where analysts can assign ownership, comment on findings, and track progress, transforming raw data into a structured incident response.
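The noise-suppression and prioritization steps above can be sketched in Python. This is a hypothetical illustration, not how any particular platform works: the `Finding` fields, the confidence and impact scales, the 30-day staleness window, and the impact-times-confidence score are all assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Finding:
    source: str        # e.g. "forum", "paste_site", "credential_dump"
    fingerprint: str   # normalized content key used for de-duplication
    confidence: float  # 0.0 to 1.0, assumed to come from an upstream classifier
    impact: int        # 1 (low) to 5 (critical), an assumed scale
    seen_at: datetime


def triage(findings: list[Finding], now: datetime,
           min_confidence: float = 0.6,
           max_age: timedelta = timedelta(days=30)) -> list[Finding]:
    """De-duplicate, drop stale and low-confidence findings, then rank."""
    latest: dict[str, Finding] = {}
    for f in findings:
        # Keep only the most recent sighting of each fingerprint.
        current = latest.get(f.fingerprint)
        if current is None or f.seen_at > current.seen_at:
            latest[f.fingerprint] = f
    kept = [
        f for f in latest.values()
        if f.confidence >= min_confidence and now - f.seen_at <= max_age
    ]
    # Simple priority score: impact weighted by confidence, highest first.
    return sorted(kept, key=lambda f: f.impact * f.confidence, reverse=True)


now = datetime(2025, 6, 30)
findings = [
    Finding("credential_dump", "creds-acme-1", 0.9, 5, datetime(2025, 6, 28)),
    Finding("paste_site", "creds-acme-1", 0.9, 5, datetime(2025, 6, 20)),     # older duplicate
    Finding("forum", "brand-mention-7", 0.7, 2, datetime(2025, 6, 29)),
    Finding("forum", "rumor-3", 0.3, 4, datetime(2025, 6, 29)),               # low confidence
    Finding("ransomware_blog", "old-leak-2", 0.95, 5, datetime(2025, 1, 1)),  # stale
]
for f in triage(findings, now):
    print(f.fingerprint, f.source)
# creds-acme-1 credential_dump
# brand-mention-7 forum
```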

The strategic benefits of this approach are substantial, including faster cyber threat detection, a significant reduction in noise, improved accuracy from ML models trained on vast datasets, and contextual intelligence that enables more informed decisions.

Automating expert analyst tasks with LLMs

Modern LLMs can now perform specific, complex tasks that were once the exclusive domain of human cyber threat analysts. These capabilities are outlined in CTIBench, a benchmark designed to evaluate Large Language Models (LLMs) in Cyber Threat Intelligence (CTI) applications.

CTIBench comprises four cyber threat analyst tasks:

- CTI-MCQ: A multiple-choice question dataset assessing LLMs’ understanding of CTI standards, threats, detection strategies, mitigation plans, and best practices, based on authoritative sources like NIST, MITRE, and GDPR.

- CTI-RCM: A task requiring LLMs to map Common Vulnerabilities and Exposures (CVE) descriptions to Common Weakness Enumeration (CWE) categories, evaluating their ability to classify cyber threats.

- CTI-VSP: A task involving calculating Common Vulnerability Scoring System (CVSS) v3 scores, testing LLMs’ ability to assess the severity of cyber vulnerabilities using metrics like Attack Vector and Confidentiality Impact.

- CTI-TAA: A task where LLMs analyze threat reports to attribute them to specific threat actors or malware families, assessing their ability to identify correlations based on historical threat behavior.
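The CTI-VSP task ultimately depends on getting the CVSS arithmetic right. For reference, the Python sketch below implements the CVSS v3.1 base-score equations and Roundup function from the FIRST specification. It covers base metrics only, assumes a well-formed vector string with the `CVSS:3.1/` prefix, and performs no input validation.

```python
import math

# Base metric weights from the CVSS v3.1 specification.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}  # used when Scope is Changed
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}


def roundup(value: float) -> float:
    """CVSS v3.1 Roundup: smallest one-decimal number >= value,
    computed on integers to avoid floating-point artifacts."""
    int_input = round(value * 100000)
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0


def base_score(vector: str) -> float:
    # Parse e.g. "CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H" (prefix skipped).
    metrics = dict(part.split(":") for part in vector.split("/")[1:])
    changed = metrics["S"] == "C"
    # Impact Sub-Score combines the confidentiality, integrity, and availability impacts.
    iss = 1 - ((1 - CIA[metrics["C"]]) * (1 - CIA[metrics["I"]]) * (1 - CIA[metrics["A"]]))
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss
    pr = (PR_CHANGED if changed else PR_UNCHANGED)[metrics["PR"]]
    exploitability = 8.22 * AV[metrics["AV"]] * AC[metrics["AC"]] * pr * UI[metrics["UI"]]
    if impact <= 0:
        return 0.0
    if changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))


print(base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H"))  # 9.8
print(base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:R/S:C/C:L/I:L/A:N"))  # 6.1
```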

While performance varies, benchmark tests show that leading models are already competent, with a clear advantage for models that are either very large or specifically trained on security data.

Best practices to strengthen cyber defenses

Security leaders must integrate these tools into a strategic monitoring program built on proactive discovery, documented response plans, and constant validation to stay ahead of a new age of faster and more efficient breach tactics.

1. Leverage AI and LLMs to scale threat monitoring

The ongoing cybersecurity skills shortage remains a major challenge, with over half of breached organizations reporting high levels of staffing shortages.

AI and LLMs offer a powerful solution to this problem by acting as a force multiplier for security teams. Modern LLMs can now perform many tasks that a cyber threat analyst would typically handle, such as gathering diverse threat intelligence, analyzing it for relevance, and correlating it with potential threats in their network environment.

This analysis, which could take a human analyst hours, can often be completed by an LLM in seconds. By leveraging these tools to automate routine analysis and intelligence gathering, security teams can scale their monitoring capabilities, freeing human experts to focus on more complex investigations and strategic defense.

2. Mapping the digital footprint

A core principle of cybersecurity is that you cannot protect what you're unaware of. A foundational best practice is the proactive and continuous discovery of every asset across your organization's digital footprint.

This process must go beyond periodic scans to provide a real-time inventory of all hardware, software, cloud instances, domains, IP addresses, and SaaS applications. Maintaining a current, automatically updated asset inventory is critical to preparing for incident response and serves as the foundation for the entire security program.

This single source of truth is essential for accurately defining the scope of cyber threat monitoring, enabling effective vulnerability management, and allowing incident responders to understand the potential impact of a breach quickly.
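Keeping that inventory current means continuously reconciling what discovery actually finds against what is recorded. The Python sketch below shows the core set comparison under simple assumptions; the function names and sample assets are hypothetical, and a real program would also track ownership, asset type, and last-seen timestamps.

```python
def normalize(asset: str) -> str:
    # Treat "WWW.Example.com." and "www.example.com" as the same asset.
    return asset.strip().lower().rstrip(".")


def reconcile(discovered: set[str], inventory: set[str]) -> dict[str, set[str]]:
    """Compare assets found by continuous discovery with the recorded inventory."""
    found = {normalize(a) for a in discovered}
    recorded = {normalize(a) for a in inventory}
    return {
        "untracked": found - recorded,  # live but unrecorded: possible shadow IT
        "stale": recorded - found,      # recorded but not seen: verify or retire
        "confirmed": found & recorded,
    }


result = reconcile(
    discovered={"WWW.Example.com.", "staging.example.com", "203.0.113.10"},
    inventory={"www.example.com", "legacy-ftp.example.com"},
)
print(sorted(result["untracked"]))  # ['203.0.113.10', 'staging.example.com']
print(sorted(result["stale"]))      # ['legacy-ftp.example.com']
```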

3. Defining incident escalation paths

Ad hoc responses to cyber threats are a recipe for failure. A critical best practice is establishing a formal, documented Incident Response Plan (IRP) that provides a clear roadmap for action when an incident occurs.

According to NIST guidance, this plan should be based on a formal policy that defines roles, responsibilities, and authorities across the organization, clarifying who can make critical decisions like shutting down a system.

It should also establish clear guidelines for prioritizing incidents and define the communication paths for notifying leadership, legal teams, and other stakeholders.
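A prioritization guideline and its communication paths can be captured as a simple lookup. The Python sketch below assumes an impact-by-urgency matrix, a common convention that the source does not prescribe; the priority levels, contact roles, and notification windows are hypothetical placeholders that an organization would replace with its own.

```python
# Hypothetical escalation matrix: priority -> (roles to notify, minutes to notify).
# The roles and time windows are placeholders, not values from NIST guidance.
ESCALATION = {
    "P1": (["CSIRT lead", "CISO", "Legal", "Executive leadership"], 15),
    "P2": (["CSIRT lead", "CISO"], 60),
    "P3": (["SOC on-call analyst"], 240),
    "P4": (["SOC queue"], 1440),
}

LEVELS = {"low": 0, "medium": 1, "high": 2}


def prioritize(impact: str, urgency: str) -> str:
    """Combine impact and urgency ('low', 'medium', 'high') into a priority."""
    score = LEVELS[impact] + LEVELS[urgency]
    return {4: "P1", 3: "P2", 2: "P3"}.get(score, "P4")


def escalation_path(impact: str, urgency: str) -> tuple[str, list[str], int]:
    priority = prioritize(impact, urgency)
    contacts, notify_within_minutes = ESCALATION[priority]
    return priority, contacts, notify_within_minutes


print(escalation_path("high", "high"))
# ('P1', ['CSIRT lead', 'CISO', 'Legal', 'Executive leadership'], 15)
print(escalation_path("medium", "low"))
# ('P4', ['SOC queue'], 1440)
```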

To make the plan actionable, organizations should create technical "playbooks" with specific, step-by-step procedures for handling common threats like ransomware or phishing. This entire process should be overseen by a formally designated Computer Security Incident Response Team (CSIRT), which ensures that response activities are consistent, coordinated, and effective.

4. Validating resilience with attack simulations

An incident response plan is only effective if it has been tested. To ensure readiness and build "muscle memory," organizations must validate their defenses and response processes through consistent, evidence-based attack simulations.

These controlled exercises are designed to reveal gaps in visibility, toolsets, and procedures before a real attacker can exploit them.

There are several key methods for validating resilience:

- Red Teaming: This is an adversarial exercise where a dedicated team simulates a real-world attacker's tactics, techniques, and procedures (TTPs) to test an organization's defenses under realistic conditions.

- Tabletop Exercises: These are discussion-based scenarios where security and business leaders walk through a simulated incident, such as a ransomware attack, to test the decision-making and communication workflows outlined in the Incident Response Plan.

- Purple Teaming: Unlike traditional red vs. blue team adversarial exercises, this is a collaborative approach where the red team (attackers) and blue team (defenders) work together. The red team executes specific attack techniques, and the blue team works to detect and respond in real time, providing immediate feedback to improve security controls and tune detection rules on the fly.

How UpGuard can help

UpGuard provides a unified platform to help security teams master the challenges of modern threat monitoring. The platform combines proactive external attack surface management with advanced threat intelligence to provide a single, comprehensive view of your organization's risk.

UpGuard's AI-Driven Triage can automate the detection and prioritization of external threats. This includes monitoring for:

- Leaked corporate and employee credentials on the dark web.

- Exposed customer or proprietary company data.

- Mentions of your brand on illicit forums and marketplaces.

Within the platform, UpGuard's AI Threat Analyst acts as a virtual Tier-1 SOC analyst, streamlining your security operations by:

- Automatically filtering and prioritizing threats across the dark web, ransomware leaks, credential dumps, and malware logs.

- Suppressing noise and false positives, surfacing only high-confidence, actionable threats to reduce alert fatigue.

- Providing a collaborative investigation workspace with audit trails, owner assignments, and remediation tracking to accelerate triage and response.

By unifying external visibility with automated intelligence and a collaborative workflow, UpGuard empowers security teams to move from a reactive to a proactive defense, reducing risk and building a more resilient security posture.